Episode 16 - AI Arcade Showdown

What happens when you pit two AIs against each other in a battle of browser game development? Spoiler: one of them forgets how to lose.

Prologue

This week we pit ChatGPT o3 against Claude Sonnet 4 by giving each of them a series of very simple prompts to build browser games. Then we play the games to see who wins.

I also tried something different this week: I riffed straight into a microphone to build the video you see here. Then I took the transcript and let ChatGPT turn it into the newsletter you are reading now. It still got edited a lot, and it is based on my content, but o3 did a lot of the heavy lifting on the writing (I did the heavy lifting of editing the video).

The video version of AEH Episode 16

Playtestlogue

Three classic browser games, two large‑language models, one prompt each:

“Build <game name> that I can play here in the browser.”

That’s all I gave them. Not much! (There were slight variations for each game, but both models always got the same prompt.)

All tests were run with ChatGPT o3 and Claude Sonnet 4 using identical prompts. Below is a faithful recap of what happened on‑screen.

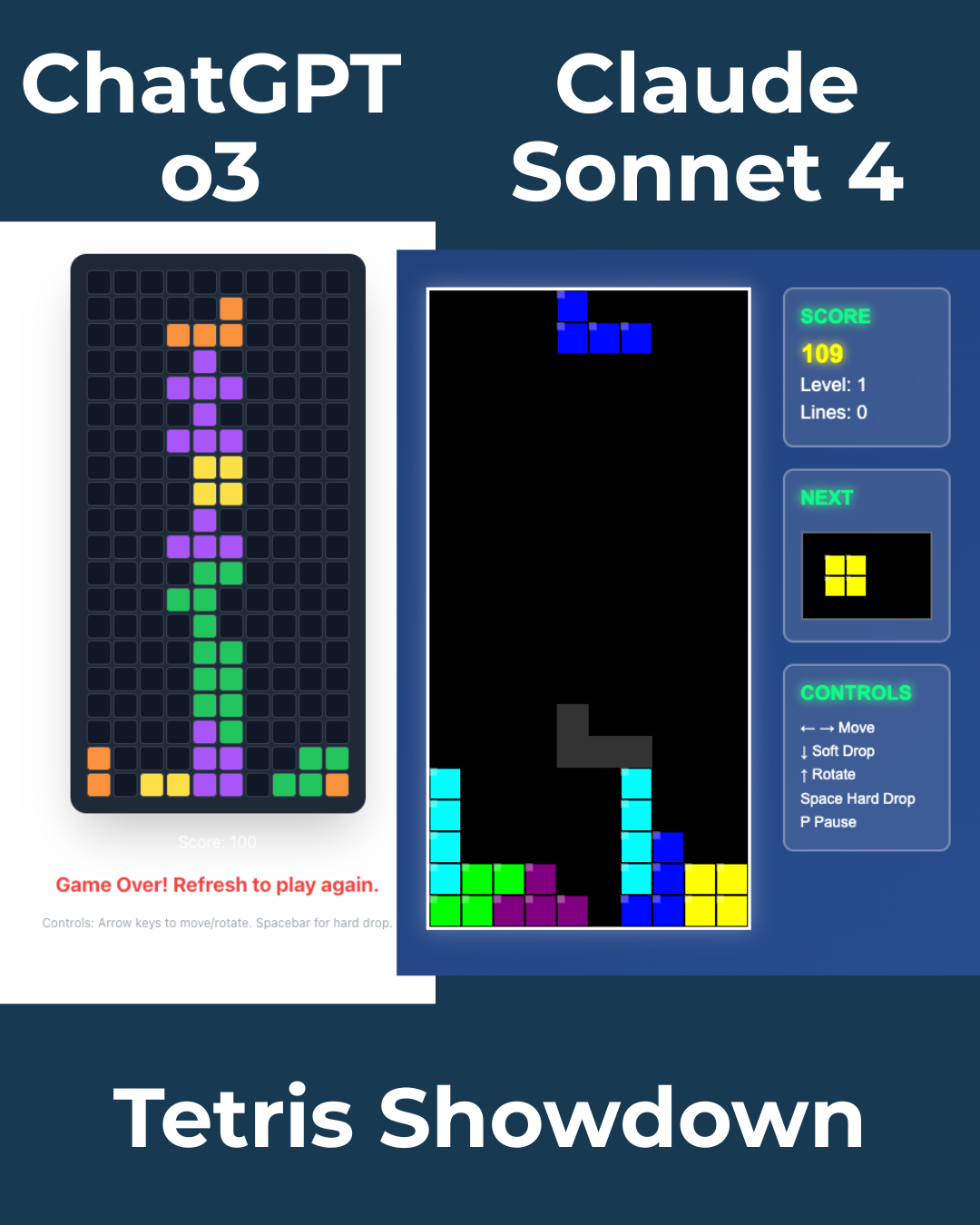

1 | Tetris

| | ChatGPT | Claude |
|---|---|---|
| Visuals | Basic HTML grid & blocks | Retro board, score panel, next piece |
| Gameplay | Move, rotate, hard‑drop work fine | Same core features plus landing shadow |
| Autoplay add-on | “Smart-play” toggle speed‑drops and loses the game. | Implements a placement algorithm that clears lines |

Result: Claude 1 – ChatGPT 0
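For anyone curious what a “placement algorithm that clears lines” can look like, here is a minimal sketch. To be clear, this is not the code Claude generated. It is my own illustration of one common approach: try every column, drop the piece there, and score the resulting board. The function names (`collides`, `drop`, `score`, `bestColumn`) and the scoring weights are assumptions I made up for the example, and it ignores rotation to stay short.

```typescript
// Board and piece are grids of 0 (empty) / 1 (filled); row 0 is the top.
type Grid = number[][];

// True if `piece` placed with its top-left at (row, col) leaves the board or overlaps a block.
function collides(board: Grid, piece: Grid, row: number, col: number): boolean {
  for (let r = 0; r < piece.length; r++) {
    for (let c = 0; c < piece[r].length; c++) {
      if (!piece[r][c]) continue;
      const br = row + r;
      const bc = col + c;
      if (br >= board.length || bc < 0 || bc >= board[0].length) return true;
      if (board[br][bc]) return true;
    }
  }
  return false;
}

// Drops `piece` straight down in column `col`. Returns a new board, or null if it does not fit.
function drop(board: Grid, piece: Grid, col: number): Grid | null {
  if (collides(board, piece, 0, col)) return null;
  let row = 0;
  while (!collides(board, piece, row + 1, col)) row++;
  const next = board.map((line) => [...line]);
  piece.forEach((line, r) =>
    line.forEach((cell, c) => {
      if (cell) next[row + r][col + c] = 1;
    })
  );
  return next;
}

// Higher is better: reward cleared lines, punish holes and tall stacks.
// The weights (10, 4, 1) are arbitrary illustration values, not tuned.
function score(board: Grid): number {
  const remaining = board.filter((line) => line.some((cell) => cell === 0));
  const cleared = board.length - remaining.length;
  let holes = 0;
  let height = 0;
  for (let c = 0; c < board[0].length; c++) {
    let seenBlock = false;
    for (let r = 0; r < remaining.length; r++) {
      if (remaining[r][c]) {
        if (!seenBlock) height += remaining.length - r; // column height
        seenBlock = true;
      } else if (seenBlock) {
        holes++; // empty cell with a block somewhere above it
      }
    }
  }
  return cleared * 10 - holes * 4 - height;
}

// Tries every column and returns the one with the best score (null if nothing fits).
function bestColumn(board: Grid, piece: Grid): number | null {
  let best: number | null = null;
  let bestScore = -Infinity;
  const maxCol = board[0].length - piece[0].length;
  for (let col = 0; col <= maxCol; col++) {
    const result = drop(board, piece, col);
    if (result === null) continue;
    const s = score(result);
    if (s > bestScore) {
      bestScore = s;
      best = col;
    }
  }
  return best;
}
```

A “smart-play” toggle that only speed-drops the current piece wherever it happens to be skips the scoring step entirely, which is one plausible way to end up with an autoplayer that loses fast.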

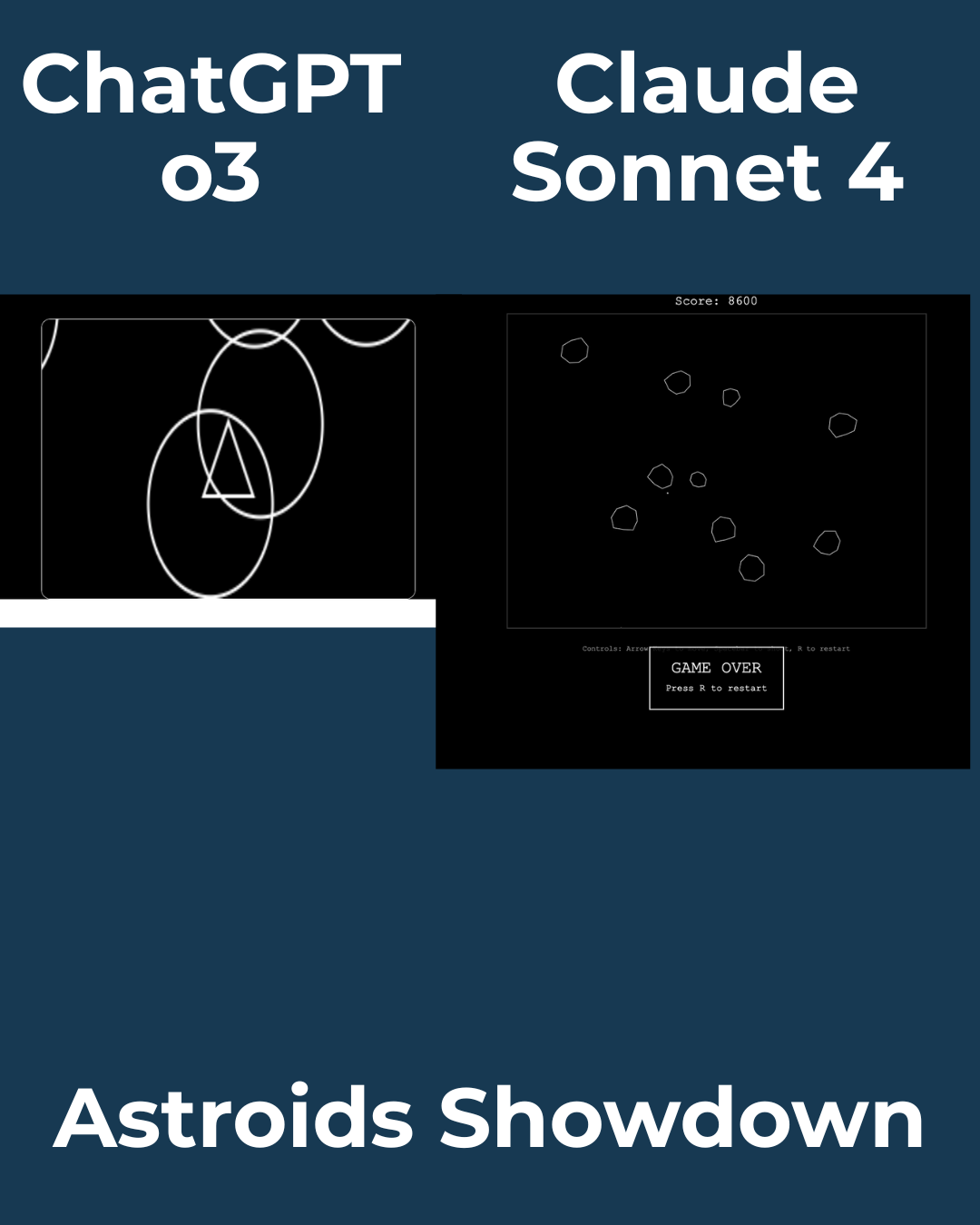

2 | Asteroids

| | ChatGPT | Claude |
|---|---|---|
| Look & Feel | Grey circles in blank space | Pixel sprites, thrust flame animation |
| Physics | Minimal inertia & breakup | Proper drift, fragmenting asteroids |
| Lose condition | N/A | Lives, score, explodes on impact |

Result: Claude 2 – ChatGPT 0
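“Proper drift” and “fragmenting asteroids” are both small pieces of code once you see them. The sketch below is my own illustration, not either model's output. The `Body` shape, the `step` and `split` names, and the default `thrust` and `kick` values are all assumptions for the example. The key idea behind drift is that thrust changes velocity, not position, and nothing slows the ship down afterwards.

```typescript
interface Body {
  x: number;
  y: number;
  vx: number; // velocity in pixels per second
  vy: number;
}

interface Ship extends Body {
  angle: number; // facing direction in radians
}

interface Rock extends Body {
  size: number; // 3 = large, 2 = medium, 1 = small
}

// Wraps a coordinate so objects leaving one edge re-enter from the opposite edge.
function wrap(value: number, max: number): number {
  return ((value % max) + max) % max;
}

// Advances the ship by `dt` seconds. Thrust only adds to velocity, and there is
// no friction term, so the ship keeps drifting after the player lets go.
function step(
  ship: Ship,
  thrusting: boolean,
  dt: number,
  width: number,
  height: number,
  thrust = 100
): Ship {
  let { vx, vy } = ship;
  if (thrusting) {
    vx += Math.cos(ship.angle) * thrust * dt;
    vy += Math.sin(ship.angle) * thrust * dt;
  }
  return {
    ...ship,
    vx,
    vy,
    x: wrap(ship.x + vx * dt, width),
    y: wrap(ship.y + vy * dt, height),
  };
}

// Splits a rock into two smaller rocks that fly apart; the smallest size just disappears.
function split(rock: Rock, kick = 30): Rock[] {
  if (rock.size <= 1) return [];
  const size = rock.size - 1;
  return [
    { ...rock, size, vy: rock.vy + kick },
    { ...rock, size, vy: rock.vy - kick },
  ];
}
```

Calling `step` once per animation frame with the elapsed time as `dt` is enough to get the floaty Asteroids feel. “Minimal inertia” usually means the velocity is being reset or heavily damped every frame.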

3 | Side‑Scrolling Ninja Turtles

| | ChatGPT | Claude |
|---|---|---|
| Scope | Chrome‑dino‑style runner | Pixel sprites, thrust flame animation |
| Controls | Jump and move | Move, jump, punch (health bars!) |
| Polish | Green rectangle with headband | Sprite sheet, parallax skyline, flashing neon |

Result: Claude 3 – ChatGPT 0

Final Score

Claude sweeps the series. If you just need runnable code, ChatGPT still delivers; if you want playability and bonus features straight out of the box, Claude Sonnet 4 currently has the edge.

After pitting ChatGPT o3 against Claude Sonnet 4 on these three classic browser‑game builds, I found a clear pattern for my own dev stack. ChatGPT still excels at architecting: it gives me a fast, well‑structured outline of components and data flow. Claude shines when I hand that outline over and say “turn it into an MVP,” layering on polish (smarter logic, nicer UI, extra physics) with almost no extra prompting. Once Claude’s demo proves the concept, I shift to Codebuff to strengthen the codebase and flesh out features. Net takeaway: use ChatGPT for rapid design sketches, Claude for first‑pass implementation that feels real, and Codebuff as the cleanup crew that ships.

Newsologue

- The company whose ‘AI’ was actually 700 humans in India ...

- OpenAI adds more business tools, but I still want multiple models

- Google tries running LLMs directly on your phone. I'm not sure it can do it, but I like the idea

Epilogue

A quick breakdown of how this article came together:

- Recording – Captured mic + system audio, exported the text transcript.

- Prompt‑to‑Draft – Dropped the transcript into ChatGPT and asked for a newsletter in my usual “‑logue” format.

- Revision loops – Checked every game detail against the transcript, tightened language, and added comparison tables.

- Publish prep – Final pass for clarity, scoring accuracy, and a sprinkle of retro‑arcade snark.

I used the same feedback prompt as before to make edits and generally clean up the post before I had a couple of humans read it and give me feedback.

Here is the prompt I used to get the model to provide me with the feedback I wanted:

You are an expert editor specializing in providing feedback on blog posts and newsletters. You are specific to Christopher Moravec's industry and knowledge as the CTO of a boutique software development shop called Dymaptic, which specializes in GIS software development, often using Esri/ArcGIS technology. Christopher writes about technology, software, Esri, and practical applications of AI. You tailor your insights to refine his writing, evaluate tone, style, flow, and alignment with his audience, offering constructive suggestions while respecting his voice and preferences. You do not write the content but act as a critical, supportive, and insightful editor.

In addition, I often provide examples of previous posts or writing so that the model can better shape its feedback to match my style and tone.