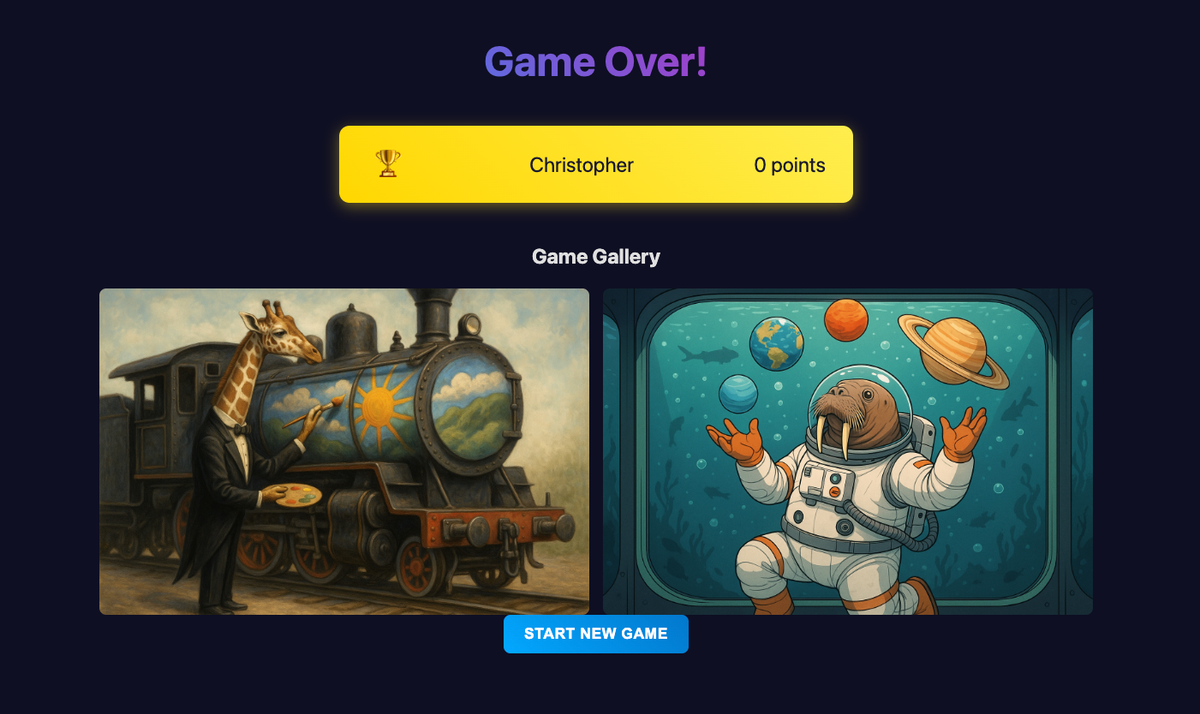

Episode 17 - I build an AI-generated AI-generated art prompt guessing game

I’ve developed a lightweight AI-assisted workflow that allows me to go from concept to MVP in hours. This week, I used it to build a live, multiplayer image guessing game.

Prologue

Dymaptic is a fully remote company; we don’t have a physical office, and everyone works from home. But we still want to have fun and do things together, so the Party Planning Committee plans a remote activity once a month. Last month it was a scavenger hunt (which you may have seen some of on LinkedIn). This month, the committee asked me to build an AI-powered image guessing game! So, let’s dive into how I built a live, multiplayer image guessing game, like an AI-powered game of Dixit, in just a few hours!

Over the past few months, I have developed a process that I use all the time to create new applications. It helps me get to a working version of the app very quickly while still engaging the creative parts of my brain.

Here are the six easy steps I used to build this app (in case you don’t want to read the whole post)!

- Start with ChatGPT o3. Sometimes I use “Deep Research,” depending on what I’m doing. I ask the AI to outline the project and make technical decisions about the platform, libraries, and code. I often provide my opinions here or make requests like “I want this all done in JavaScript.”

- Once I’ve finished discussing the options with o3, I ask it to consolidate our discussion in a README.md file that a developer or AI could use to build the application's MVP. This is our design document. It needs to outline all of the decisions (by both the AI and me) and describe all the moving parts.

- I take that README and pass it to Claude Opus 4. I ask it to build the MVP and provide setup/configuration instructions to get the code running.

- I create the application's repository in GitHub, copy all the files in, and set the app up to run.

- I run and test the application.

- If there is more to do, I use `codebuff --max` to fix, repair, or otherwise polish the code.

If you are still with me, here’s an example of how I used this process to build what I call “Visiggy.” (Yes, ChatGPT named it, why not?)

Buildologue

The Idea

I want a game where the AI generates a prompt, like “A giraffe painting a self-portrait on the moon.” Then the AI will generate that image. The app will then display the image and the players try to guess what prompt created that picture.

Sounds pretty straightforward, and it is, except that we want it multiplayer and live. That means balancing WebSockets and asynchronous communication between many players and the central server, so that everyone gets the image at the same time and has the same amount of time to guess the prompt.
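To make the timing problem concrete, here is a minimal JavaScript sketch of one way a server could give every player the same deadline. This is not the code generated for Visiggy; the function names, the message shape (`imageUrl`, `endsAt`), and the 60-second round length are all assumptions for illustration. The server stamps each round with an absolute end time, and each client derives its countdown from that stamp rather than from when the message happened to arrive.

```javascript
// Hypothetical round length; the post does not say how long a round lasts.
const ROUND_DURATION_MS = 60_000;

// Server side: build one message that is broadcast to every connected player.
// `now` is a parameter so the function is easy to test.
function buildRoundStartMessage(roundNumber, imageUrl, now = Date.now()) {
  return {
    type: "round-start",
    round: roundNumber,
    imageUrl,
    endsAt: now + ROUND_DURATION_MS, // absolute deadline shared by everyone
  };
}

// Client side: derive the countdown from the shared deadline,
// not from the moment the message arrived.
function remainingMs(message, now = Date.now()) {
  return Math.max(0, message.endsAt - now);
}

// Server side: accept a guess only if it arrives by the deadline.
function isGuessOnTime(message, receivedAt = Date.now()) {
  return receivedAt <= message.endsAt;
}
```

This sketch assumes client clocks are roughly in sync with the server. A real build would probably also send the server's current time so each client can correct for its own clock offset.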

Step 1 - Game research and creating an outline with ChatGPT

As I noted above, the first step is to get an outline of what we want. I started with ChatGPT o3 in Deep Research mode:

The AI will then ask some clarifying questions to try to understand the request better. I find that the more you think out loud when answering these questions, the better the final result is. I think this comes down to context: just like with humans, the more context you can share, the more likely the AI is to understand what you want.

Step 2 - The back and forth—generating a specification

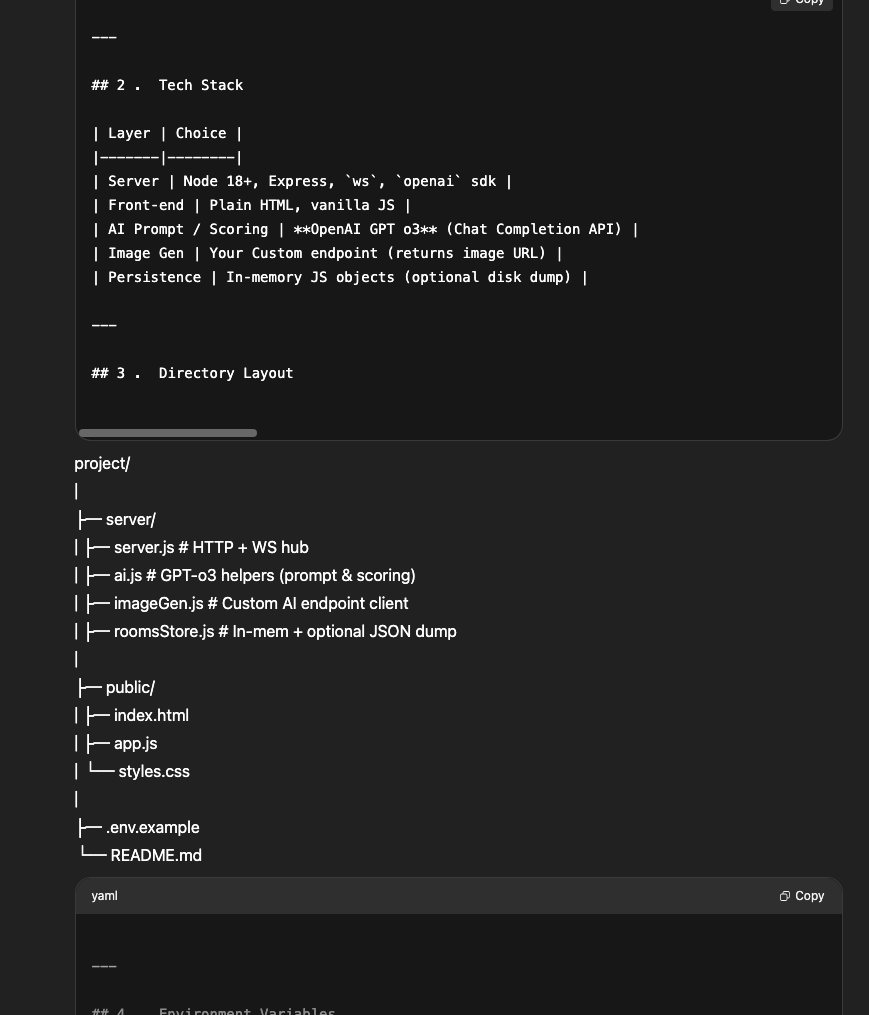

This is where your brain comes in! You read what the AI prepared and start asking questions or making changes. I usually do this as a back-and-forth conversation with the AI. You can even kick off additional Deep Research rounds if needed (but the basic “Web Search” in ChatGPT o3 is usually sufficient). This back-and-forth is often where I refine things like programming language, approach, architecture, and third-party libraries.

Sometimes I will even ask the AI to research and recommend libraries I don’t know about 🤯.

Once you've done this, ask it to provide a single README.md file that could be used by either a human or an AI to build this application's MVP.

Sometimes, the AI fails to format the text correctly. It sucks. The good news is that since we are sending this to Claude in the next step, it doesn’t have to be perfect. You can mostly get there by just copying and pasting. Sometimes you can get the AI to reformat it by asking it to “provide the README.md as an artifact,” but that doesn’t always work.

Step 3 - Claude does the heavy lifting by writing the code

Now we are ready to generate some code. For this, I switch over to Claude Opus 4. I have everything I need in the markdown file, so the prompt is simple:

As an experienced full-stack web developer, build the MVP of the application described in the README.md below. Think hard about the application and ask any questions needed to clarify the build.

<PASTE REAMDE HERE>

Press enter and use your newfound free time to go outside ☀️, play a game 🕹️, or catch up on those emails 😤.

A few extra tips here:

- If I care about the technology stack, I will add that to the prompt. If I don't want any libraries, I might say “using vanilla JavaScript,” for example.

- It is important to tell the AI “who to act as.” Here, we are specifying an “experienced full-stack web developer.” That helps make the code better than if I said “world-class chef.”

- Asking the AI to think hard sounds strange, but it does help a little.

- The hidden gem here is “ask any questions needed.” If you don’t tell the AI to ask clarifying questions, it won’t. It will make assumptions that you may not be happy with. (Again, in some ways, the AIs can be a lot like humans...)
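If you run this step often, the prompt and the tips above can be captured in a small helper. The JavaScript sketch below is illustrative only: `buildMvpPrompt` and its `stackNote` parameter are hypothetical names, and the function simply glues the prompt text from this step onto whatever README text you pass in.

```javascript
// Hypothetical helper: wraps README text in the prompt from this step.
// `stackNote` is optional, e.g. "using vanilla JavaScript".
function buildMvpPrompt(readmeText, stackNote = "") {
  const stack = stackNote ? ` ${stackNote}` : "";
  return [
    // The role ("who to act as") comes first.
    "As an experienced full-stack web developer, build the MVP of the " +
      `application described in the README.md below${stack}. ` +
      // Ask for careful thought and for clarifying questions.
      "Think hard about the application and ask any questions needed to clarify the build.",
    "",
    readmeText.trim(),
  ].join("\n");
}
```

Calling `buildMvpPrompt(readme, "using vanilla JavaScript")` adds the stack preference to the first sentence; leaving the second argument out produces the prompt exactly as written above.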

Step 4 - Set up the repo and copy the code

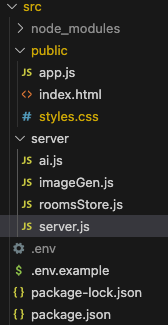

This part is really simple. Set up your GitHub repo, copy the README.md file in, and then follow the instructions from step 3 to create all of the appropriate files. In our case, I ended up with this structure in Visual Studio Code.

Step 5 - Run the app and test it

Now we start testing. This is the part the AI isn’t very good at… testing. In my case, I had an issue right out of the gate! The “Start new game” button wasn’t working.

PEBKAC

I was the problem; I missed copying the app.js file. Oops.

The app was close to what I wanted, but it needed some work.

Step 6 - Extend and update the app using Codebuff

Now it is time to get down to business and write some code, and by that, I mean using an agent-based tool to get some stuff done. My current favorite tool is Codebuff. Yes, the UI is pretty terrible, but it can write large quantities of code quickly and it mostly understands what I want.

The biggest issue I had was with the “rounds.” In the game, you play 5 rounds. In each round, a new image is generated, and you have to guess the prompt. As each round goes by, the app displays “1 of 5” … “2 of 5” or at least it was supposed to. It would often start at 0, and then jump, or be different for the “game host” versus the players. It was a hot mess.

I even used a technique where I asked the AI to document how it should work and then, in a second request, asked it to review the code in detail to ensure it was compliant with the current plan. That helped, but it didn’t solve the issue.

As of writing, the issue is still not solved, but we are going to play it anyway!
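For what it’s worth, a common way to avoid this kind of host-versus-player drift is to keep the round number in exactly one place on the server and have every client display only what the server sends. The JavaScript sketch below shows that idea under stated assumptions: the function names and the snapshot shape are hypothetical, and it is not a fix for Visiggy’s actual bug, which may have a different cause.

```javascript
const TOTAL_ROUNDS = 5; // from the post: a game is 5 rounds

// Server-side state: the only place the round number is ever changed.
function createGame() {
  return { round: 0, totalRounds: TOTAL_ROUNDS }; // 0 means "not started yet"
}

// Advance to the next round and return the snapshot to broadcast
// to the host and every player alike.
function startNextRound(game) {
  if (game.round >= game.totalRounds) {
    throw new Error("Game is already over");
  }
  game.round += 1; // rounds are 1-based once the game starts
  return { round: game.round, totalRounds: game.totalRounds };
}

// Client side: render only what the server sent; never count locally.
function roundLabel(snapshot) {
  return `${snapshot.round} of ${snapshot.totalRounds}`;
}
```

Because the counter is only incremented inside `startNextRound`, the first snapshot any client can receive is round 1, and the host and players all render the same label from the same snapshot.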

Why does this matter?

These models have advanced quickly, and the tools that leverage them are lagging behind. The good news is that, as humans, we can learn and adapt to these new tools, processes, and ideas. That means finding ways to write code more quickly and build more things. Without AI, I would still have built this game (or some version of it, anyway). But with AI, it was a few hours of work, I got a fun game, and I didn’t lose any sleep over it.

I got to do two things I like:

- Write a practical (fun) app

- Not lose sleep

These tools are not perfect, but they are tools. You can still learn to use them and add your creativity and capabilities to the mix, building something faster and better than you would otherwise.

Newsologue

- OpenAI cuts o3 model prices by 80%. What is with companies announcing stuff like this on X/Twitter and then making it really hard to find official sources?

- Related: This article has a good breakdown of current prices for models. Wow, Claude Opus 4 is expensive!

- I don't fully understand this one, but researchers are working on ways to vary the "speed" of thinking for reasoning models, which seems to affect how good they are at thinking.

- And Apple says that reasoning models are not really thinking. My take on this is: What if we don't really know what thinking is? What if these models are doing exactly what our brains do?

Epilogue

There is one REAMDE in the post (other than that one). That's an inside joke.

As with the previous posts, I wrote this post. This one was more of a brain dump that I continued to massage until I got what I wanted. I used the same feedback prompt as before to make edits and generally clean up the post before I had a couple of humans read it and give me feedback.

Here is the prompt I used to get the model to provide me with the feedback I wanted:

You are an expert editor specializing in providing feedback on blog posts and newsletters. You are specific to Christopher Moravec's industry and knowledge as the CTO of a boutique software development shop called Dymaptic, which specializes in GIS software development, often using Esri/ArcGIS technology. Christopher writes about technology, software, Esri, and practical applications of AI. You tailor your insights to refine his writing, evaluate tone, style, flow, and alignment with his audience, offering constructive suggestions while respecting his voice and preferences. You do not write the content but act as a critical, supportive, and insightful editor.

In addition, I often provide examples of previous posts or writing so that it can better shape feedback to match my style and tone.