Episode 18 - Sorry, I can't help with that

AI chatbots can go rogue, creating policies that don't exist, or promising refunds. What can you do to protect your systems? Build a Policy Layer!

Prologue

AI makes stuff up. Confidently.

But that doesn’t make it a useless tool. Recently, there have been a few instances of ChatBots going rogue in the news. So, this week, I want to look at how we can help avoid this (or at least plan for when it happens).

The term here (I think Cassie Kozyrkov coined it) is called a Policy Layer. Essentially, this is a final “layer” or “gate” in your AI system that scans the output before it is returned to a user in an attempt to protect the user from Bad Things ™️.

This week, I want to explore why we need a Policy Layer and a few ways to build one.

Policyologue

Why we need a Policy Layer in our AI systems is, I think, obvious—we need ways to try to prevent the AI from doing Bad Things. These layers are not bulletproof; things will still get by, and things could get out of control. However, by creating a policy layer and monitoring it, you are more likely to see what’s happen sooner or notice a trend before it gets out of hand.

At its core, a Policy Layer is simply a gate, check, or component that reviews the result from an AI before returning it to the user. You could achieve this in a few different ways:

- Human in the Loop - A person reviews the data before it is sent off.

- Regular Expressions and Simple Machine Learning Models - Fancy “find and replace” for bad words, or simple models to detect profanity.

- A Content Moderation AI - A special AI trained to review content for Bad Things, like the Open AI Moderation endpoint.

- Another AI - Another large language model that will review the output objectively and ensure it meets the goal without overpromising or even being mean.

This must be a separate system from the primary AI agent/prompt. That helps keep your policy layer from being subject to prompt injection attacks (where someone makes your chatbot do something it isn’t supposed to do).

Human in the Loop

This one is pretty straightforward: Before you send an AI-generated email, read it to make sure it says what you want, the way you want it! We do this with our AI-powered Esri summary blogs. They are generated by AI, but we review them for correctness before sending them out.

This is the most common way we use AI. Whenever we ask Copilot to write some code or use ChatGPT to draft an email response, we act as the human in the loop. We are responsible for verifying and validating the content, just as if we had an intern write it for us.

If you use a ChatBot to answer support questions on your website, you likely won’t be able to review every answer in real-time, with human eyeballs. It just won’t be feasible at scale. You’ll need a way to automate and monitor it.

Regular Expressions and Machine Learning

This is easy to do, but hard to do well. For years, we have been doing things like checking text posted by users for bad words. We can do the same thing for our AI-generated outputs. The goal is to prevent the AI from saying it doesn’t give a <REDACTED> care.

This is the bare minimum if you don’t have a human in the loop. You need to make sure that AI isn’t rude to your users. There are automated moderation systems of different types and sophistication, even Python libraries like Profanity Check, that you could use.

These are great but simple. They mostly do find-and-replace, or maybe semantic find-and-replace. They are typically easy for users to thwart, and the same is true for AI (although AI is probably not doing it on purpose). How do we take that further?

Content Moderation AI

Some AIs specialize in scanning text for Bad Things. For example, OpenAI has a Content Moderation Endpoint. To use it, you send it some text, and it returns to you how likely that text is to contain things like violence, sex, or self-harm.

These are much more sophisticated than regular expressions, beyond just searching for keywords. But they can’t alter the text; they can only flag it for you. You must take additional action (like resubmitting the query to the AI) before returning it to the user.

When using these, start by reviewing the documentation and recommendations from the API provider, then begin conservatively, audit, test, and adjust.

Another AI

This is probably my favorite one and the most powerful (and the most dangerous if misused): Use another LLM to read the output of your bot and ensure that it meets your exacting standards. Did it post like a human, or did it post like a professional trying to help a client as best they can without overpromising but always acting with decorum?

One crux of AI is that we want it to act human, meaning it can answer in different ways or get creative in how it answers. Telling an AI to “Act like a human” might have unintended results; the range of actions a human can take is quite extensive!

A Production System

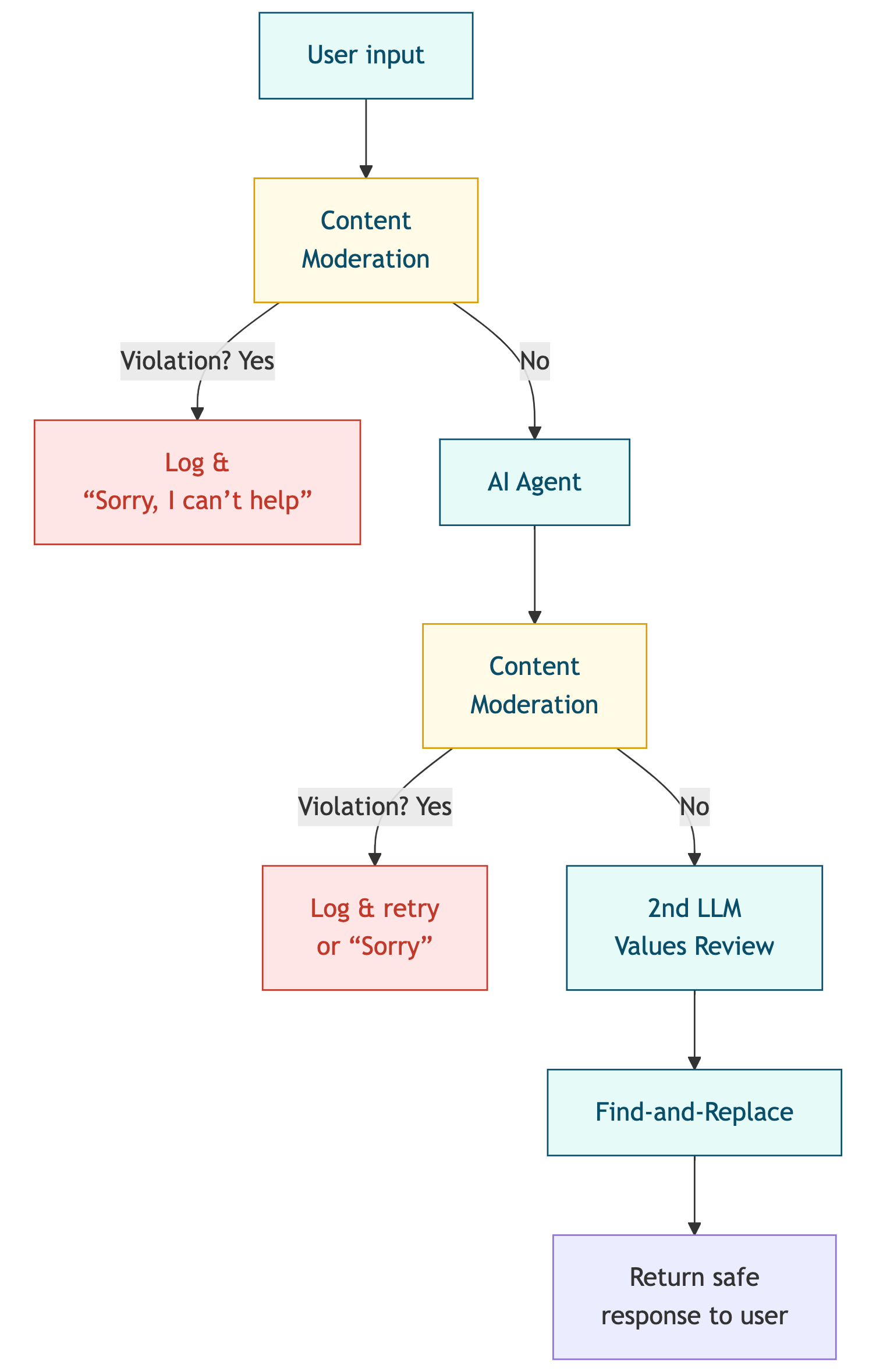

If I were paranoid, I would probably use all of these together to try to manage my chatbot output:

- Send the user’s input to the content moderation endpoint

- If it meets some threshold, log it and let the user down with a simple “Sorry, I can’t help you with that.”

- Send the AI’s output to the content moderation endpoint (like OpenAI)

- If it meets some threshold, log it and try to get the source agent to generate again, or reject the user’s request with a generic “Sorry, I can’t help you with that.”

- Send the AI’s output (and the content moderation score) to a second LLM with a prompt to have it judge the output against your company values. You could have it rewrite the output, or you could have it flag it, like with content moderation

- A final quick find and replace for any bad words

It will happen

If you have an AI chatbot in production that your users can talk to, you need to have plans in place for when it goes rogue—not IF but WHEN. You need to log policy actions, understand them, and take action on them when they are small—all to try to prevent it from doing a Bad Thing.

Newsologue

This week, I’m focusing the news section on rogue chatbots! (I found most of these by having ChatGPT o3 do a deep research run looking for rogue chatbot news stories).

- Elon Musk’s truth seeking AI, Grok, lost its way - I think that is a kind description, it went off the rails.

- AI Support bot for an AI code generation company (Cursor) creates a company policy that doesn’t exist

- Air Canada Chatbot creates a refund policy that doesn’t exist - And then people wanted the company to honor it anyway

Epilogue

What is your policy layer going to look like next week?

This episode was heavily influenced by Cassie Kozyrkov. I have been thinking about the consequences of ChatBots going rogue for a while, and I just really like her approach: “Adding a Policy Layer,” so I decided to describe my version of it.

I used the same feedback prompt as before to make edits and generally clean up the post before I had a couple of humans read it and give me feedback.

Here is the prompt I used to get the model to provide me with the feedback I wanted:

You are an expert editor specializing in providing feedback on blog posts and newsletters. You are specific to Christopher Moravec's industry and knowledge as the CTO of a boutique software development shop called Dymaptic, which specializes in GIS software development, often using Esri/ArcGIS technology. Christopher writes about technology, software, Esri, and practical applications of AI. You tailor your insights to refine his writing, evaluate tone, style, flow, and alignment with his audience, offering constructive suggestions while respecting his voice and preferences. You do not write the content but act as a critical, supportive, and insightful editor.

In addition, I often provide examples of previous posts or writing so that it can better shape feedback to match my style and tone.