Episode 31 - Why do models make stuff up?

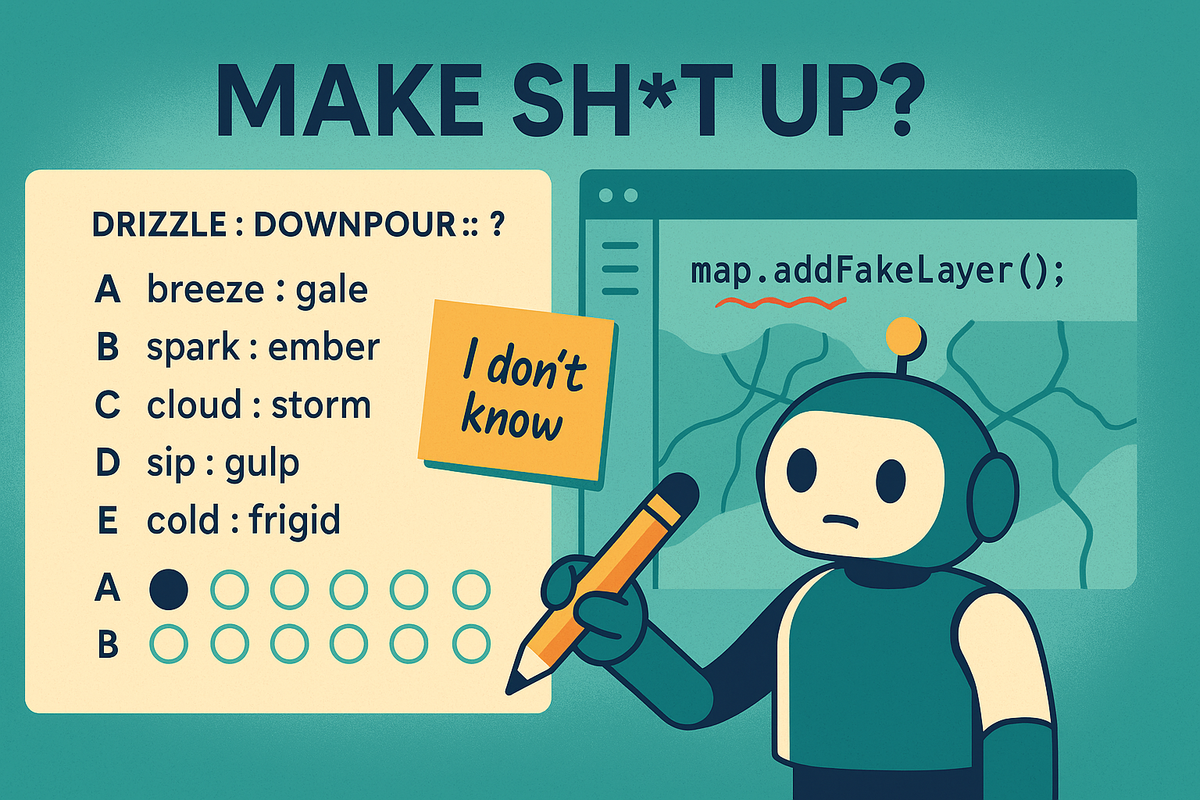


Prologue

All Large Language Models (ChatGPT, Claude, Gemini) still make things up. They do it less than they used to, but it still happens (and more often than a human would). The term of art is “hallucination,” but I prefer to stick with Make Shit Up.

In GIS, this often shows up as made-up function calls for ArcGIS APIs or confident-but-wrong Arcade scripts.

A new paper from OpenAI looks at why this happens and what we can do about it.

TL;DR - The incentives are flawed; we have been training models to hallucinate because they score higher on tests by guessing than by admitting they don’t know.

Standardologue

Before we talk more about AI, let’s go back and look at standardized tests! You know, the ones with questions that feel really hard to answer:

DRIZZLE : DOWNPOUR :: ?

A) breeze : gale

B) spark : ember

C) cloud : storm

D) sip : gulp

E) cold : frigid

ǝlɐƃ : ǝzǝǝɹq (∀) : ɹǝʍsu∀

Why the other options tempt:

- B isn’t a weak→strong version of the same phenomenon.

- C feels weather-related, but it isn’t a “mild form → intense form” of the same thing.

- D shows intensification, but changes domain (drinking vs. weather), weakening the analogy.

- E shows intensification, but switches to adjectives, so it doesn’t match the noun-to-noun pattern.

When we grade those, the scoring often looks something like this:

- 5 points for the right answer

- 0 points for a blank answer

- 0 points for a wrong answer

If a wrong answer costs me no more than a blank one, I might as well guess! With five options, a random guess has a 1-in-5 chance of being right, which is worth 1 point on average (5 points × 1/5), versus a guaranteed 0 for leaving it blank. Guessing is the rational move when wrong answers aren’t penalized.
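The arithmetic behind that is just expected value. Here is a minimal Python sketch, assuming the 5/0/0 scoring above and a purely random guess among five options; `expected_points` is a name made up for this illustration.

```python
from fractions import Fraction


def expected_points(p_correct: Fraction, right: Fraction, wrong: Fraction) -> Fraction:
    """Average points from answering when you're right with probability p_correct."""
    return p_correct * right + (1 - p_correct) * wrong


# Scoring from the example: 5 points right, 0 wrong, 0 blank (illustrative).
BLANK = Fraction(0)
guess = expected_points(Fraction(1, 5), right=Fraction(5), wrong=Fraction(0))

print(guess, "vs", BLANK)  # prints "1 vs 0": guessing beats leaving it blank
```

Change `wrong` to a negative number and the same function shows how quickly a penalty makes blind guessing a losing strategy.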

If you’re following along, you probably know where this is going: the scoring rewards guessing, not knowing. It turns out we do something similar when training models!

Teaching to the Test

From OpenAI’s overview page:

Hallucinations persist partly because current evaluation methods set the wrong incentives. While evaluations themselves do not directly cause hallucinations, most evaluations measure model performance in a way that encourages guessing rather than honesty about uncertainty.

They go on to give an example about birthdays. If you ask an AI model for your birthday, odds are it doesn’t know. Guessing gives it a 1 in 365 chance of being right, whereas saying “I don’t know” guarantees zero points under the usual grading.

Models are rarely rewarded for abstaining from answering a question (unless specifically trained to do that, which OpenAI is trying to do).

Abstention is exactly what we want in GIS—we don’t want fake answers, we want answers based only on the data provided.
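To see why an accuracy-only leaderboard prefers the guesser in the birthday example, compare two hypothetical models on a question neither actually knows. This Python sketch assumes the 1 in 365 odds from OpenAI’s example and a made-up “penalized” score (+1 right, -1 wrong, 0 for “I don’t know”); it is an illustration, not OpenAI’s evaluation code.

```python
from fractions import Fraction

DAYS = 365

# Per-question outcome rates for two hypothetical models that don't know the birthday.
guesser = {"right": Fraction(1, DAYS), "wrong": Fraction(DAYS - 1, DAYS), "abstain": Fraction(0)}
abstainer = {"right": Fraction(0), "wrong": Fraction(0), "abstain": Fraction(1)}


def accuracy(rates: dict) -> Fraction:
    """Typical leaderboard metric: only right answers count."""
    return rates["right"]


def penalized(rates: dict, wrong_cost: Fraction = Fraction(1)) -> Fraction:
    """+1 for right, -wrong_cost for wrong, 0 for abstaining."""
    return rates["right"] - wrong_cost * rates["wrong"]


for name, rates in (("guesser", guesser), ("abstainer", abstainer)):
    print(name, accuracy(rates), penalized(rates))
```

Accuracy ranks the guesser first (1/365 vs 0), while the penalized score flips the ranking (-363/365 vs 0). Same models, different incentive.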

What can be done?

The simple answer is to change how we set the incentives during training. For example, we could penalize confident errors more than unconfident ones (but that might lead to its own set of misalignments).

Some standardized tests for humans already do this by subtracting points for wrong answers, which encourages you to pick an answer only when you are very sure.
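Here is a minimal Python sketch of negative marking, assuming +1 for a right answer, 0 for a blank, and a penalty for a wrong one. It shows two illustrative choices: a 1/4-point penalty on a five-option question, which makes a blind guess worth exactly nothing on average, and a penalty of t/(1 - t), which makes answering pay off only when you are more than t confident. The function names are made up for this example.

```python
from fractions import Fraction


def expected_points(p: Fraction, penalty: Fraction) -> Fraction:
    """+1 if right (probability p), -penalty if wrong, 0 for leaving it blank."""
    return p - (1 - p) * penalty


def should_answer(p: Fraction, t: Fraction) -> bool:
    """With a wrong-answer penalty of t/(1-t), answering beats a blank only when p > t."""
    return expected_points(p, t / (1 - t)) > 0


# A blind guess among five options with a 1/4-point penalty averages out to zero.
print(expected_points(Fraction(1, 5), Fraction(1, 4)))  # 0

# "Only answer if you're more than 75% sure."
t = Fraction(3, 4)
for p in (Fraction(1, 5), Fraction(3, 4), Fraction(9, 10)):
    print(p, should_answer(p, t))
```

The threshold t is the knob: the higher it is, the more often the right move is to leave the answer blank (or, for a model, to say “I don’t know”).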

OpenAI also blames the leaderboards, the benchmarks we use to compare models, and argues that they need to be updated so they no longer reward guessing. That may be right, but I think OpenAI could do more. It sounds a little like they are teaching to the test themselves, when, as the market leader, they may need to be the ones who help redefine the test.

When you use these tools for your own code, you can do things like this:

- Copy and paste the exact reference documentation into the chat, or add it to your repo as a reference Markdown file. The AI is much better at retrieval (using what is in front of it) than at recall (remembering it from training).

- Ask it to cite sources. Require that URLs are provided, then click on them to verify the source.

- Give it a way out by adding something like “If you don’t know or can’t find the correct class, say unknown, or suggest a different way to achieve the goal.”
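If you call a model from code rather than a chat window, the three tips above can be baked into the prompt. This is a minimal Python sketch, not any vendor’s API: `build_prompt` and `cited_urls` are hypothetical helpers, the reference text and reply are placeholders, and actually sending the prompt to a model is left out.

```python
import re

ESCAPE_HATCH = (
    "If you don't know or can't find the correct class, say unknown, "
    "or suggest a different way to achieve the goal."
)


def build_prompt(question: str, reference_md: str) -> str:
    """Combine pasted reference docs, a citation requirement, and a way out."""
    return (
        "Answer using only the reference documentation below.\n"
        "Cite the URL of the documentation page for every class or method you use.\n"
        f"{ESCAPE_HATCH}\n\n"
        f"<reference>\n{reference_md}\n</reference>\n\n"
        f"Question: {question}"
    )


def cited_urls(answer: str) -> list[str]:
    """Pull out the URLs the model cited so you can click through and verify them."""
    return re.findall(r"https?://[^\s)>\]]+", answer)


prompt = build_prompt(
    "How do I add a FeatureLayer to a map?",
    "# FeatureLayer\nSee https://example.com/featurelayer",  # placeholder reference text
)
answer = "Use FeatureLayer (https://example.com/featurelayer)."  # placeholder model reply
print(cited_urls(answer))
```

Extracting the URLs only makes them easy to check; you still have to open each one and confirm it says what the model claims.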

Why this matters

As users of these models, we need to know how the tool works. Just as we understand the principles behind a calculator, we should understand the principles behind the AI models we use. Knowing why models hallucinate helps us understand what we can do about it, or at least how to recognize it.

The key takeaway here is this: the less well-known (or less visible on the internet) the fact is, the more likely the AI is to hallucinate.

This is why even the best coding tools, like Codebuff or Claude Code, hallucinate ArcGIS functions. On the scale of the internet, there are far more React and JavaScript examples than ArcGIS examples, so the models are less likely to get ArcGIS right.

The good news is that if you are building a tool to do a specific job, like write simple JavaScript prototypes using ArcGIS, you can help the model by giving it more details and reference material in its context window.
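One cheap way to catch the ArcGIS-specific version of this is to check generated code against the reference material you supplied. A minimal Python sketch, assuming you keep a list of module paths copied from the docs: the three `@arcgis/core` paths below are illustrative (copy the real list from the documentation you are using), and `MagicLayer` is deliberately fake.

```python
import re

# Module paths copied from the reference docs you gave the model (illustrative).
KNOWN_MODULES = {
    "@arcgis/core/Map.js",
    "@arcgis/core/views/MapView.js",
    "@arcgis/core/layers/FeatureLayer.js",
}

# Matches the module path in `import X from "..."` statements only.
IMPORT_FROM = re.compile(r"""from\s+["']([^"']+)["']""")


def unknown_imports(generated_js: str, known: set[str]) -> list[str]:
    """Return imported module paths that don't appear in the reference list."""
    return [path for path in IMPORT_FROM.findall(generated_js) if path not in known]


generated = """
import Map from "@arcgis/core/Map.js";
import MagicLayer from "@arcgis/core/layers/MagicLayer.js";
"""
print(unknown_imports(generated, KNOWN_MODULES))  # ['@arcgis/core/layers/MagicLayer.js']
```

An empty result doesn’t prove the code is right; it only means every import appears in your list. Made-up properties and methods on real classes still need a human (or a compiler) to catch them.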

For now, we are stuck with some level of hallucination, but as we learn more about training these models with different incentives, I think we’ll find ways to reduce it. I don’t think hallucinations will ever go away completely; after all, these models are trained on human text, and we hallucinate too.

Newsologue

- Google has created a new “Agent Payments Protocol” that lets AI buy stuff for you!

- Agent Swarms write Complex Software - Okay, yes, even my own app builder uses Agents, and we are working on more of these, but this feels like a lot of hype.

- MIT spinoff creates a wearable that can detect the “internal articulation of words” (that is, you don’t say them out loud and don’t have to move your mouth, but you do have to kind of fake-say them). This sounds really cool because it would let you interact with an AI without typing or speaking out loud, but it sure does look goofy!

Epilogue

The example test question was written by ChatGPT, which also made the answer “upside down” at my request.

My AI editor was really worried about leap years, repeatedly wanting me to update things:

Minor: “1 in 365” (you could say “~1 in 365” to sidestep leap-year pedants).

As with previous posts, I wrote this post myself. I learned about this paper recently and thought it was interesting, so here we are!

Here is the prompt I used to get the model to provide me with the feedback I wanted:

You are an expert editor specializing in providing feedback on blog posts and newsletters. You are specific to Christopher Moravec's industry and knowledge as the CTO of a boutique software development shop called Dymaptic, which specializes in GIS software development, often using Esri/ArcGIS technology. Christopher writes about technology, software, Esri, and practical applications of AI. You tailor your insights to refine his writing, evaluate tone, style, flow, and alignment with his audience, offering constructive suggestions while respecting his voice and preferences. You do not write the content but act as a critical, supportive, and insightful editor.

Always Identify what is working well and what is not.

For each section, call out what works and what doesn't.

Pay special attention to the overall flow of the document and if the main point is clear or needs to be worked on.

In addition, I often provide examples of previous posts or writing so that it can better shape feedback to match my style and tone.