Episode 39 - GeoAI vs. The Large Language Model: A Tale of Two AIs

This week I recap a presentation I did walking through different ways you can use AI and GIS today.

Prologue

I just finished giving a presentation on GIS and AI at the Coding and Coordinates meetup (If you are in PDX, join us for the next one in February!), and, like the responsible adult I am, I completely forgot to hit record on my camera. So instead of sharing a polished video, I'm doing what any self-respecting CTO would do: I'm recreating it from memory via voice memo and turning it into a newsletter. You're welcome.

The goal of the event was simple: help GIS folks understand that AI isn't some mystical force—it's a tool you can actually use. Like, tomorrow. Today, even. And no, you don't need to become a data scientist to make it work.

GeoAIologue: Two Kinds of AI Walk Into a Bar...

Let's start by defining a few things: GeoAI and Generative AI are not the same thing.

GeoAI (a general term for the integration of AI with spatial data and geospatial technology) is your traditional machine learning stuff—random forests, deep learning models, the works. These are purpose-built tools that do exactly one thing really well. Want to extract boats from satellite imagery? There's a model for that. Cars in parking lots? Yep, there's a model. Impervious surfaces? You guessed it—there's a model.

One exception here is the class of model called Segment Anything (often referred to as SAM). These models are kind of overachievers in the GeoAI world. They combine transformer technology from language models with image technology. To use these, you describe what you want to extract, and they'll do their best. They won't be quite as good as the specialized models, but here's their superpower: they give you actual polygon outputs. They'll draw the outline of that car, not just tell you "hey, there's a car somewhere in here."

Then we have Large Language Models (LLMs)—the ChatGPTs and Claudes of the world. These are the generalists. They can write code, analyze images, extract information, do fuzzy matching on messy data, and about a thousand other things. They can't tell you where in an image something is (no polygons for you), but they can tell you what's there and give you percentages, descriptions, and analysis.

For the remainder of this post, we will primarily discuss LLMs, as that's where things become particularly interesting for everyday GIS work.

What can you actually DO with this?

Here's where it gets fun. LLMs can tackle a bunch of real GIS problems:

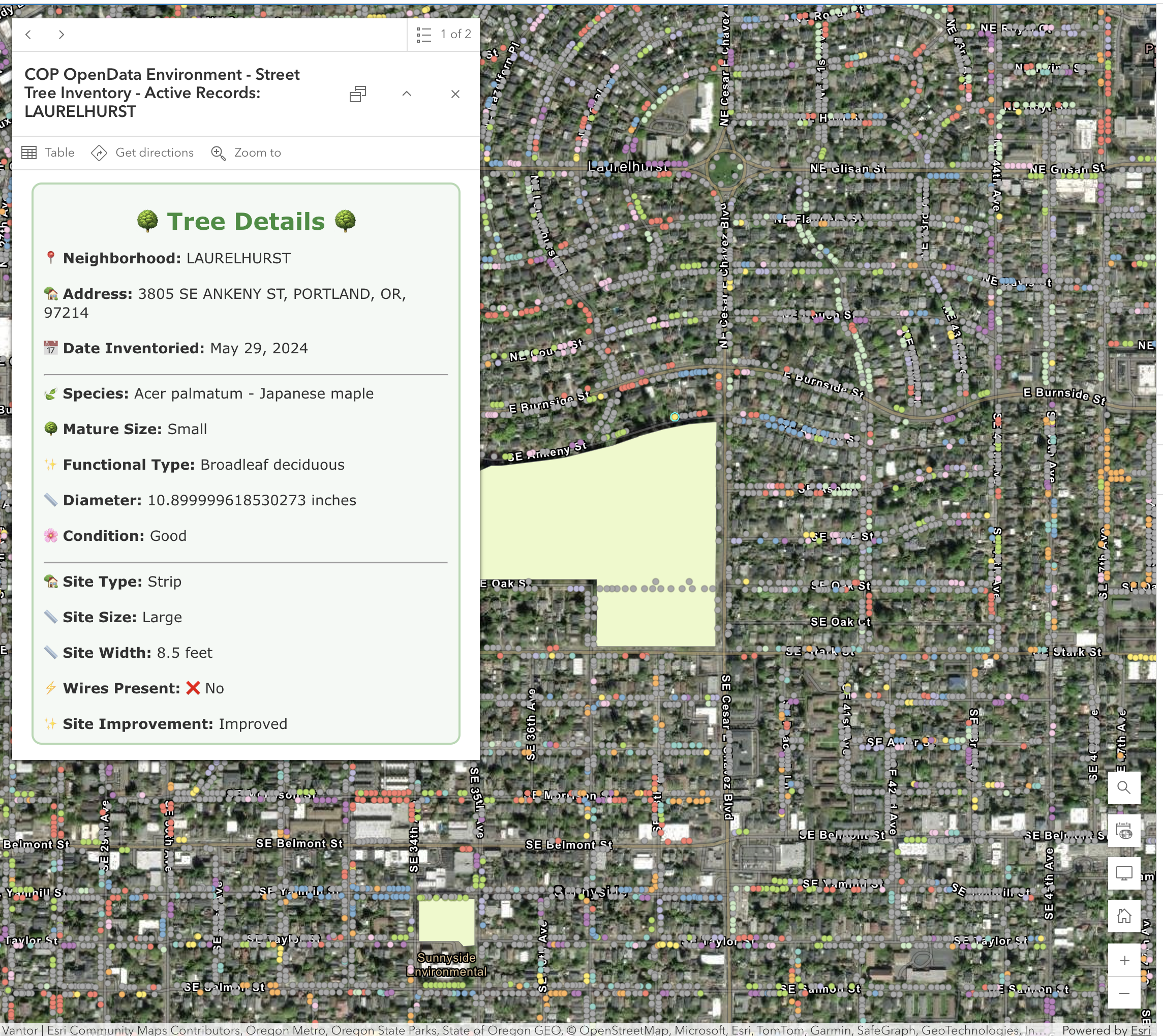

1. Image Analysis—Without a custom model

You can use an LLM to analyze imagery and tell you what's in it. These models will not just tell you "this is a tree," but "this image is 60% grass, 30% trees, 10% impervious surface." After a natural disaster? Feed it parcel imagery and ask it to assess damage: "Is this house destroyed by fire? Flooded? How severe?" To be clear, these are estimates. If you need exact numbers, use a GeoAI model that can extract the precise polygons.

One key aspect here is that you define the methodology and output structure in your prompt. You're instructing the AI on exactly which rules to follow and what to extract. You can ask it to provide an explanation or a confidence value, allowing you to easily identify where you might need to pay the most attention.
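Here is a minimal sketch of what "defining the methodology and output structure" can look like. It builds a land-cover prompt and checks the JSON that comes back. The class names, the JSON keys, the 0-to-1 confidence scale, the 5-point tolerance, and the 0.5 review threshold are all assumptions for illustration. The call to the model itself is left out because it depends on which provider you use.

```python
import json

# Hypothetical land-cover classes; swap in whatever your methodology needs.
CLASSES = ["grass", "trees", "impervious"]


def build_prompt(classes=CLASSES):
    """Return a prompt that spells out the rules and the exact output shape."""
    keys = ", ".join(f'"{c}"' for c in classes)
    return (
        "You are a GIS analyst estimating land cover from an aerial image.\n"
        f"Estimate the percentage of the image covered by each class: {keys}.\n"
        "Percentages must be whole numbers that sum to 100.\n"
        "Respond with JSON only, in this shape:\n"
        '{"percentages": {<class>: <int>}, "confidence": <0.0-1.0>, '
        '"explanation": "<one sentence>"}'
    )


def parse_reply(reply_text, classes=CLASSES, tolerance=5):
    """Parse the model's JSON reply and flag records that need human review."""
    data = json.loads(reply_text)
    pct = {c: float(data["percentages"].get(c, 0)) for c in classes}
    total = sum(pct.values())
    confidence = float(data.get("confidence", 0.0))
    return {
        "percentages": pct,
        "confidence": confidence,
        "explanation": data.get("explanation", ""),
        # Flag replies whose numbers do not add up or that the model doubts.
        "needs_review": abs(total - 100) > tolerance or confidence < 0.5,
    }
```

The `needs_review` flag is the part that earns its keep: it turns the explanation and confidence value into a short list of images a person should actually look at.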

2. Survey123 Webhooks: The Fantasy Map Generator

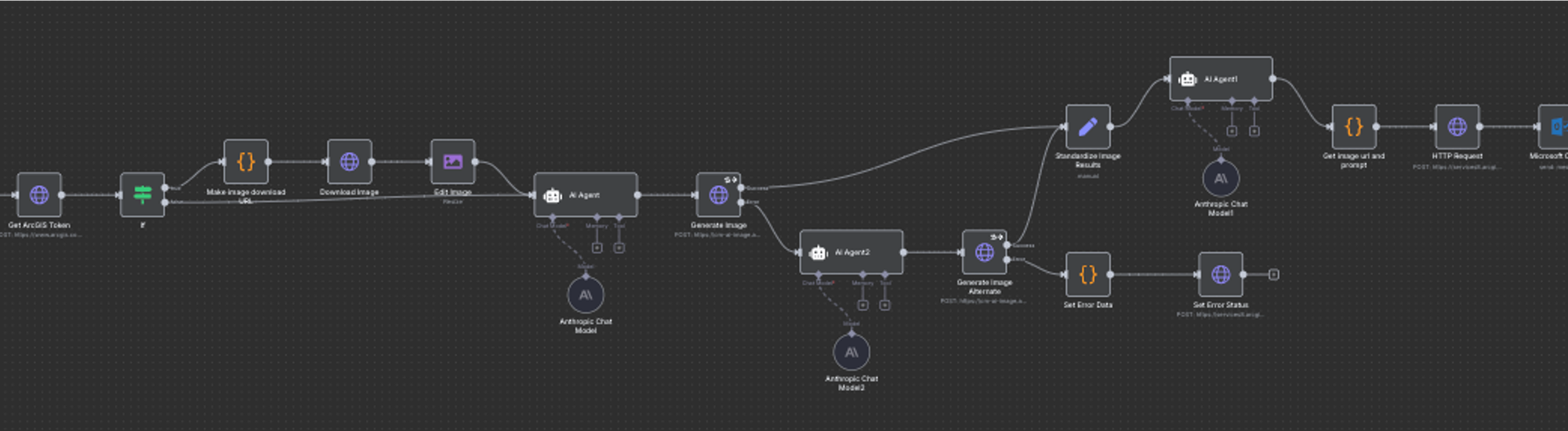

Remember the fantasy map generator? That's Survey123 → webhook → n8n workflow → AI analysis → custom output. Someone fills out a form, and within minutes, they get a personalized fantasy map. I know it is silly, but the underlying architecture can apply to lots of different types of systems:

- Sentiment analysis on public comment submissions

- QC'ing field data as it comes in

- Extracting information from photos of signs or equipment

- Comparing AI assessments with human ratings
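In my demo the middle of that pipeline lives in n8n, but the same logic can be sketched in a few lines of Python, using sentiment analysis as the example. This assumes the webhook body carries the submitted answers under `feature.attributes`; the `comment` field name is hypothetical, and `analyze` is a stand-in for whatever function calls your model.

```python
import json


def handle_submission(raw_body, analyze):
    """Pull the submitted answers out of a webhook body and run an AI step.

    `analyze` is a placeholder for whatever calls your model; it takes a
    prompt string and returns the model's text reply.
    """
    payload = json.loads(raw_body)
    # Assumption: answers arrive under feature.attributes, keyed by field name.
    attributes = payload.get("feature", {}).get("attributes", {})
    comment = attributes.get("comment") or ""  # hypothetical field name
    if not comment.strip():
        return {"status": "skipped", "reason": "empty comment"}

    prompt = (
        "Classify the sentiment of this public comment as positive, "
        "neutral, or negative. Reply with one word.\n\n" + comment
    )
    sentiment = analyze(prompt).strip().lower()
    return {
        "status": "ok",
        "objectid": attributes.get("objectid"),
        "sentiment": sentiment,
    }
```

Passing `analyze` in as an argument keeps the handler testable with a fake model, and lets you swap providers without touching the webhook logic.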

3. Fuzzy Data Matching (Or: Why I'll Never Write Another Regex)

If you’ve been working with data for more than an hour, you’ve likely encountered the issue of unclean data. For instance, consider a dataset of game results that states "Roosevelt High vs. the Tigers," and you need to identify which schools are involved. Writing a regular expression for that can be quite challenging.

AIs are good at solving this problem. Provide a list of school names and mascots and ask it to parse each game result into two team names, rating the confidence of the parsing. Just as with image analysis, the confidence value provides valuable feedback on the quality of the result. In my demo (and in the table below), there is a record with a confidence level of zero. Upon examining the data itself, I noticed that the confusion was caused by the game being a Varsity vs. JV matchup.
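A rough sketch of that setup is below. The school list is made up, the 0-to-100 confidence scale and JSON keys are my own choices for illustration, and the model call is left out. The one extra safeguard is that any team name the model returns that is not in your list gets its confidence forced to zero.

```python
import json

# Hypothetical reference list: school name -> mascot.
SCHOOLS = {"Roosevelt High": "Roughriders", "Lincoln High": "Tigers"}


def build_matching_prompt(game_results, schools=SCHOOLS):
    """Ask the model to resolve each messy result into two known schools."""
    roster = "\n".join(f"- {name} ({mascot})" for name, mascot in schools.items())
    games = "\n".join(f"{i}. {text}" for i, text in enumerate(game_results, 1))
    return (
        "Known schools and mascots:\n" + roster + "\n\n"
        "For each game below, identify the two schools involved. Use only "
        "names from the list. Rate your confidence from 0 to 100.\n"
        'Respond with a JSON array of {"game": <number>, "team_a": <name>, '
        '"team_b": <name>, "confidence": <int>}.\n\n' + games
    )


def parse_matches(reply_text, schools=SCHOOLS):
    """Parse the reply and zero out confidence for names not in the list."""
    rows = json.loads(reply_text)
    for row in rows:
        if row["team_a"] not in schools or row["team_b"] not in schools:
            row["confidence"] = 0
    return rows
```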

This trick—asking for confidence levels—works for any extraction task. The AI tries to be honest, and you can use those scores to sort and prioritize what needs human review. When using this trick, I often review records from high, middle, and low confidence levels to see if the AI is on track or not, and make adjustments to my prompt as needed.
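The high, middle, and low spot check is easy to automate. This is one possible way to do it, assuming each record is a dict with a numeric `confidence` key; splitting the sorted records into thirds and sampling three per band are arbitrary choices, not a rule.

```python
import random


def review_sample(records, per_band=3, seed=0):
    """Pick a few records from the low, middle, and high confidence bands."""
    ranked = sorted(records, key=lambda r: r["confidence"])
    third = max(1, len(ranked) // 3)
    bands = {
        "low": ranked[:third],
        "middle": ranked[third:2 * third],
        "high": ranked[2 * third:],
    }
    rng = random.Random(seed)  # fixed seed so the sample is repeatable
    return {
        name: rng.sample(band, min(per_band, len(band)))
        for name, band in bands.items()
    }
```

If the "high" samples look wrong, that is a sign the prompt needs work, not just the low-confidence records.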

4. Code Generation (With Context)

LLMs are surprisingly good at writing Python scripts for ArcGIS, JavaScript for web maps, and even complex Arcade expressions. The keyword here is context. The more you describe your data structure, goals, and constraints, the better the code.

This is why tools like Claude Code (command line) work so well—they can see your entire codebase, not just the snippet you paste into a chat window. Same reason the ArcGIS Arcade Assistant is so useful: it already knows about your layers and fields.

Esri’s AI Assistants

Speaking of the Arcade Assistant, here are the assistants that ArcGIS gives you:

- ArcGIS Arcade assistant (my demo favorite)

- Survey123 assistant (my actual favorite)

- ArcGIS Pro assistant

- StoryMaps assistant

- Business Analyst assistant

- Teams assistant

- Hub assistant

- Documentation assistant

- Translation assistant

All of the AI assistants are off by default; you can turn them on for your ArcGIS Online organization in the settings page.

My Secret Survey123 Trick

The Survey123 assistant will help you generate a form. (One catch: it cannot edit forms that are already created.) The really useful part for me, though, is that it will create a feature service for this form to store the collected data. Since it is a real-life feature service, we can use it for almost anything!

When I need a new feature service to consume in a web app or to load data into, I reach for the Survey123 assistant to build that feature service for me, even if I’m not going to use Survey123 (I might be using Field Maps or a custom app instead). The assistant knows everything about forms, including fields, data types, domains, and even relationships, and all of this maps directly to a feature service. This is essentially an AI-powered database generator.

My Top 5 Tips

My top tips for getting good results:

- Use frontier models. ChatGPT, Claude, Gemini—these are the best models available. If you can only pick one right now, pick Claude. It's the best at code and the most flexible in conversation.

- Talk to it like a human. Describe the problem, what you want it to do, and how you want it to respond. Use jargon: if you’re working in GIS, use GIS terms. The LLM has a huge knowledge space, and jargon helps focus it on the right part of its “brain.”

- Give it context. Tell it about your layers, your fields, what you're trying to accomplish. More context = better results. This can be as simple as copying and pasting the JSON metadata about a layer.

- Start simple, then build. Look for automation opportunities that you wouldn’t have attempted without AI but that are simple to do, like extracting text or condition from images, validating collected data, or running sentiment analysis. You don’t have to start with a chatbot to get value out of AI.

- Be transparent about using AI. No Secret Cyborgs.
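On the "give it context" tip: pasting the raw layer JSON works, but a short summary is easier to read and uses fewer tokens. Here is a small sketch that turns a layer definition into plain text you can paste ahead of your request. It assumes the JSON has the `name`, `geometryType`, and `fields` keys you typically see in a feature layer definition, with coded-value domains under `domain.codedValues`; the output format is just one I find readable.

```python
def layer_context(layer_json):
    """Summarize a layer definition as plain text to paste ahead of a request."""
    lines = [
        f"Layer: {layer_json.get('name', 'unknown')}",
        f"Geometry: {layer_json.get('geometryType', 'unknown')}",
        "Fields:",
    ]
    for field in layer_json.get("fields", []):
        line = f"- {field['name']} ({field.get('type', 'unknown')})"
        # Fields without a domain often carry an explicit null here.
        domain = field.get("domain") or {}
        coded = domain.get("codedValues")
        if coded:
            values = ", ".join(str(cv["code"]) for cv in coded)
            line += f", allowed values: {values}"
        lines.append(line)
    return "\n".join(lines)
```

Follow the summary with your actual ask, for example "Write an Arcade expression that labels each feature by status."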

Newsologue

- GPT-5.1 came out this week. I have not noticed much of a difference myself. I hope it is better at writing code. I used GPT-5 to write some Python this week and I regretted not using Claude.

- Mohith Shrivastava from Salesforce has this quote: “A generic AI agent can't grasp your company's unique processes, but a context-aware tool can act as a powerful pair programmer, helping a developer draft complex logic or model data with greater speed and accuracy,” which I think is spot-on today. However, coding agents make me very, very productive. For example, the image analysis example I shared above is a real app that I vibe coded in about 30 minutes. Using AI agents to write code is a skill you have to learn.

- Google provides a “File Search” API, so you don’t have to build RAG over your own documents yourself. That could be very, very powerful if it works well (they are the king of search, after all).

Epilogue

AI tools aren't perfect. They're not going to replace your expertise today. But they can accomplish in minutes what used to take hours, and they can handle the tedious tasks so you can focus on the more interesting problems.

The barrier to entry isn't "learn to be a data scientist." It's "learn to describe your problem clearly." If you can do that, you can use these tools today.

This week, I used Claude to write the first draft of this post, drawing on a voice memo recap of the presentation, the original outline of the presentation, and various versions of the slide deck that I created using AI along the way. I then rewrote approximately 40% of what it created. It captured my voice and style, but in an extreme way, almost a caricature of them!

If you want to chat about any of this, hit me up. And if you're organizing a conference and want someone to give a presentation where the demos might fail spectacularly but the architecture is sound, you know where to find me.