Episode 48 - Pass the Note

Large Language Models don’t actually "remember" your conversations. Every message you send includes the entire conversation so far. When that gets too long, applications have to make choices about what to keep, and sometimes that goes sideways.

Prologue

Last week, I asked ChatGPT a question. It answered, like normal. Then I asked a follow-up question about something different. It answered that too. But then it also answered the first question again. And again. Every single response, no matter what I asked, kept returning to that original question like a jukebox stuck on repeat.

I’ve heard this from others, too. Something in the way ChatGPT manages conversations is causing it to get “stuck.” To understand why that might happen, we need to talk about something most of us never think about: the context window.

Noteologue

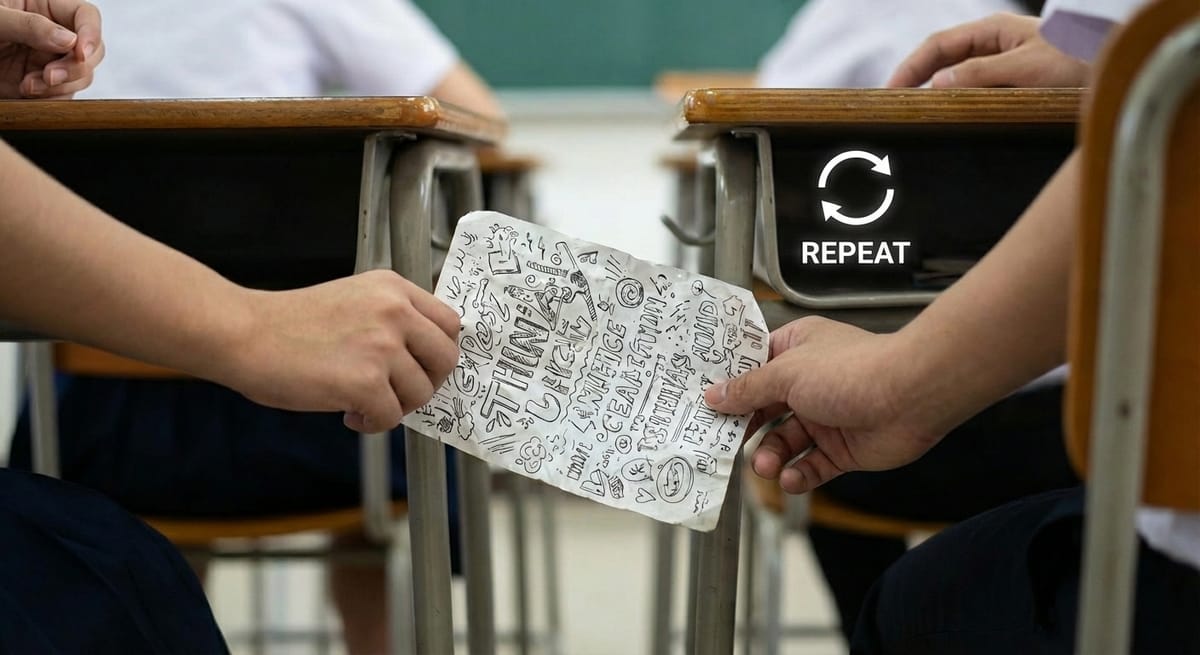

Remember passing notes in school? You’d take a piece of paper, write something on it, fold it up, and slide it to your friend. They unfold it, read what you wrote, write a reply, fold it back up, and pass it back. You unfold it, read it, add your response, fold, pass—back and forth.

Eventually, the paper fills up (assuming you kept it hidden from the teacher that long). You’ve folded and unfolded it so many times that it’s as soft as cloth, covered in handwriting on both sides, and there’s nowhere left to write.

That’s the context window.

Every time you send a message to Claude, ChatGPT, or Gemini (you name it), you’re not just sending that one message; you’re passing the entire note. Every previous exchange, every response, the whole conversation goes back to the model. It unfolds the paper, reads everything from the beginning, writes its reply at the bottom, and passes it back.

The model doesn’t remember your conversation. It re-reads the entire note every single time.

This is what we mean when we say the API is “stateless.” The model itself has no memory between requests. What feels like a continuous conversation is actually the application re-sending the full note each time.
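To make “stateless” concrete, here’s a minimal Python sketch of the note-passing loop. The `fake_model` function is a hypothetical stand-in for a real model API (it doesn’t call any service). The point is that the application, not the model, holds the history and hands over the whole thing on every turn.

```python
def fake_model(messages):
    """Hypothetical model: it can only see what is on the note it was handed."""
    latest = messages[-1]["content"]
    return f"Messages on the note: {len(messages)}. Latest: {latest}"


class ChatApp:
    """The application, not the model, keeps the conversation history."""

    def __init__(self):
        self.history = []  # the "note" that gets passed back and forth

    def send(self, text):
        self.history.append({"role": "user", "content": text})
        # The entire note goes to the model every time, not just `text`.
        reply = fake_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


app = ChatApp()
print(app.send("Pizza or tacos?"))
print(app.send("What about dessert?"))
```

The second reply reports three messages on the note even though only one new line was typed: the model received the earlier question and its own earlier answer all over again.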

Just like that piece of paper in school, there is only so much space. As of this writing, Claude’s context window is 200,000 tokens. That’s something like 300 pages of written text.
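Where does “300 pages” come from? Here’s a back-of-the-envelope version, assuming two common rules of thumb for English text: roughly 0.75 words per token and roughly 500 words per printed page. Both ratios vary a lot with the text and the tokenizer, so treat the result as a ballpark.

```python
# Rough rules of thumb for English prose, not exact figures.
WORDS_PER_TOKEN = 0.75  # assumption: a token is about three quarters of a word
WORDS_PER_PAGE = 500    # assumption: a dense, single-spaced page


def tokens_to_pages(tokens):
    """Estimate how many printed pages a given number of tokens fills."""
    words = tokens * WORDS_PER_TOKEN
    return words / WORDS_PER_PAGE


print(tokens_to_pages(200_000))  # → 300.0
```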

300 pages is so much!

Yeah, 300 pages of text is a lot, but it gets used up fast. The models usually write a lot, and their thinking takes up tokens too. So does tool use, like doing a web search. A tool like Claude Code can consume a lot of context just by reading existing code files.

So all good things eventually come to an end.

What happens when the note fills up?

Out of Context

There are several ways to handle a context that is too large, or even a document that is too large to read in a single context window. The context window is like our note; it can only hold so much. We can fill it up by having a long conversation or by adding other content: documents, source code, or anything else the AI might use as a reference in its response.

Option 1: Summarize the old note

This is what Claude Desktop and Claude Code do. When the conversation gets too long, they “compact” the older parts, which is essentially a summarization step. All the back-and-forth from early on gets condensed into a paragraph or two that captures the key points. Then the conversation continues on a fresh “paper” with more room to write.

It’s like writing “we debated pizza vs tacos for lunch for fifteen minutes and eventually decided on tacos” instead of copying all fifteen minutes of the debate. It’s accurate, but it might lose that funny thing you said.
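Here’s a minimal sketch of the compaction idea. It is not how Claude Desktop or Claude Code actually implement it: token counts are approximated by counting words, and `summarize` is a hypothetical placeholder for the step where a real application would ask the model itself to write the summary.

```python
def count_tokens(message):
    return len(message.split())  # crude stand-in for a real tokenizer


def summarize(messages):
    # Hypothetical placeholder: a real app would send these messages to the
    # model with instructions like "summarize the key points".
    return f"[Summary of {len(messages)} earlier messages]"


def compact(history, budget, keep_recent=2):
    """Fold older messages into one summary when the note gets too full."""
    total = sum(count_tokens(m) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # still room on the note
    old, recent = history[:-keep_recent], history[-keep_recent:]
    return [summarize(old)] + recent


history = [
    "Pizza or tacos for lunch?",
    "Tacos have more variety.",
    "But pizza is easier to share.",
    "Fine, tacos it is.",
    "What should we get for dessert?",
]
print(compact(history, budget=20))
```

With a 20-word budget, the 25-word conversation becomes `['[Summary of 3 earlier messages]', 'Fine, tacos it is.', 'What should we get for dessert?']`. The two most recent messages survive word for word; whatever detail the summary drops is gone from the note.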

Option 2: Copy the parts you think are important

This seems to be closer to what ChatGPT does. Instead of summarizing everything, it selectively pulls in the parts of the conversation it thinks are most relevant to your current message. The old notes go in a pile, and when you ask for something new, it rifles through them and grabs what seems related.

This is powerful when it works. But when it misfires, as it did for me, the system keeps pulling the same information over and over, convinced it's still relevant, even when you've moved on.
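A minimal sketch of selective copying, under loud assumptions: ChatGPT’s actual method isn’t public, and a real system probably uses something more sophisticated (such as embeddings) than the simple keyword overlap used here. The hypothetical `pick_relevant` function scores each old message by shared keywords and copies the best matches onto the new note.

```python
import re

# Common words that would otherwise match almost everything.
STOPWORDS = {"a", "an", "the", "i", "to", "for", "in", "of", "do", "how",
             "what", "which", "should", "is"}


def keywords(text):
    """Lowercase words in the text, minus stopwords."""
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS


def pick_relevant(old_messages, new_message, k=1):
    """Return up to k old messages that share the most keywords with the new one."""
    query = keywords(new_message)
    scored = [(len(query & keywords(m)), m) for m in old_messages]
    scored = [pair for pair in scored if pair[0] > 0]  # drop unrelated messages
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in scored[:k]]


old = [
    "How do I reproject a shapefile to WGS84?",
    "What's a good taco recipe?",
    "Explain map projections in simple terms.",
]
print(pick_relevant(old, "Which projection should I use for a web map?"))
```

The same scoring hints at one plausible way a system like this could get stuck: if an old message happens to share keywords with almost anything you ask, it keeps winning, so it keeps getting copied back onto the note.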

Neither approach is perfect. Summarizing can lose the context you actually needed. Selective copying can get stuck on the wrong thing. Both are clever engineering solutions to a fundamental constraint: the paper has a size limit.

Memory

If this process sounds familiar, it might be because we discussed something similar in Episode 29, where I explained that models don’t learn, but they sure look like they do.

The key insight is the same here: the model itself never changes during your conversation. Instead, it’s the application (Claude Desktop, ChatGPT, whatever) that does clever tricks to make it feel like the AI remembers things.

When Claude "remembers" that you prefer a certain coding style, or that you work in GIS, that's not because the model learned it. It's because the application saved that information somewhere and writes it at the top of every note before passing it to the model.

Same thing with conversation history. The model isn't remembering what you said ten messages ago. The application is just copying those ten messages onto the note every time.
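In code, the whole trick can be this small. A minimal sketch with hypothetical names (`saved_memories`, `build_note`): saved facts go at the top, the copied history goes in the middle, and your new message goes at the bottom. The model only ever sees the finished list.

```python
# Hypothetical facts the application saved from earlier chats.
saved_memories = [
    "The user works in GIS.",
    "The user prefers short Python examples.",
]


def build_note(memories, history, new_message):
    """Assemble what actually gets sent to the model on this turn."""
    header = "Things to remember about the user:\n" + "\n".join(
        f"- {fact}" for fact in memories
    )
    return [header] + history + [new_message]


note = build_note(saved_memories, ["Hi!", "Hello! How can I help?"],
                  "How do I buffer a point?")
print("\n\n".join(note))
```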

Understanding this changes how you use these tools. If something feels "stuck," it might be the app's note management misfiring, not the AI being stubborn. If the AI suddenly "forgets" something important, it might be because that part was summarized away or not copied to the new note.

The model just reads what's on the paper passed to it.

Leaky Memory

While editing, Holly asked me a question I wanted to at least mention. She noticed that sometimes Claude, in one chat, seems to have access to information from other chats. It does!

This process works similarly to Option 2 above: copying what the model considers important. ChatGPT seems to do this automatically—extending the context with additional information from other chats. Claude, on the other hand, seems to treat this as an action; the model can decide to search the other chats.

This process relies on semantic relationships, which I talked about back in Episode 9, where we compared embeddings to geocoding.
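For the curious, here’s a toy version of that kind of semantic lookup. Each past chat gets a vector, a bit like coordinates for its meaning, and the search picks the chat whose vector points in most nearly the same direction as your question (cosine similarity). The three-number vectors below are made up for illustration; real embeddings come from a model and have hundreds or thousands of dimensions, and this isn’t a claim about how Claude or ChatGPT implement chat search.

```python
import math


def cosine_similarity(a, b):
    """1.0 means same direction; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)


# Made-up vectors standing in for real embeddings of past chats.
past_chats = {
    "Reprojecting a shapefile": [0.9, 0.1, 0.0],
    "Taco recipe ideas": [0.0, 0.2, 0.9],
    "Choosing a map projection": [0.8, 0.3, 0.1],
}


def most_similar(query_vector, chats):
    """Return the title of the chat whose vector best matches the query."""
    return max(chats, key=lambda title: cosine_similarity(query_vector, chats[title]))


query = [0.7, 0.4, 0.1]  # pretend embedding of "Which projection works best for a web map?"
print(most_similar(query, past_chats))  # → Choosing a map projection
```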

Newsologue

- Anthropic Launches Cowork - I think this is pretty big. Claude Code is a very effective programmer, but it is also a very effective… worker. Cowork intends to bring that capability to a nice GUI so you don’t have to deal with the command line. I’m eager to see how some of our non-developer staff at dymaptic make use of it.

- Everyone Launches a “Health” App - Both OpenAI and Anthropic launched HIPAA-compliant AI apps that can… answer health questions? I haven’t tried them yet, but regular Claude is pretty good at health questions, so these might be okay. But there might also be so many constraints and controls on them that they aren’t very useful.

- Some monkeys broke out of the ~~jail~~ zoo - The real issue here is that animal control had trouble finding them because reports on social media were a mix of real images and AI-generated ones, and sometimes they couldn’t tell the difference. Don’t make deepfakes.

Epilogue

This post started (as they sometimes do) as a kitchen conversation with a couple of friends, where I was trying to explain what “context” is. That turned into a brain dump into Claude, which cooked up the note-passing metaphor but failed to execute it. I took it from there, then Holly edited.

I’ve written about context windows a few times now because I think they’re an important factor in understanding how these models behave when you use them.