Episode 7 - Dear HAL, is This Drain Name Something I can say to my Mom?

A quick look at an old idea I had a few years ago to use LLMs to filter or protect input data. Also a pretty good try by ChatGPT to make a four panel comic.

Prologue

On June 1, 2023, I posted a video showing how GPT-4 could help cities filter public input (like naming infrastructure elements) without making a human read every questionable submission. Think: someone adopting a storm drain and naming it something clever like “Drainy McDrain-Face.” Fun. But not everything people submit is so innocent.

Now, almost two years later, models have gotten better, faster, and easier to integrate. So, I figured it was time to revisit that civic-minded demo—both to show how things have changed and to share a few new tricks I’ve picked up.

Back then, I was already using AI almost daily. Now? I use it for everything from generating code to double-checking ideas. And while folks have been asking for a deep dive on how I built dymaptic’s AI-powered Esri post summarizer, I wanted to warm up with something simple: how to use AI to keep free-form input clean, safe and maybe even a little funny.

Humans are endlessly creative: we only want the good kind. So today, let’s revisit how to build a friendly AI bouncer—one that only lets through things you’d say to your mom.

Momologue

Humans are very creative creatures; most of the time, they are wonderful and amazing, creating art, music, and software that enriches the world. But sometimes, that creativity is less than helpful. Let’s take an example. Say that we work for a city, and, like any good forward-leaning municipality, we allow community members to adopt infrastructure elements, like drains or highways (Esri even has their own solutions for this). And when folks do that, we encourage them to name those elements.

The creativity here is excellent with things like:

- “Drainy McDrain-face”

- “drain, drain, go away” (in a sing-songy voice).

Of course, some names are rude, lewd, and just plain gross. We don’t want our employees to read those to decide if they are clean or not, and we don’t want to post them publicly. So what’s a city to do?

Creating an AI Guardian

AI Moderation lets you filter out inappropriate input before humans see it, saving time and protecting your team. Unlike hard-coded filters, LLMs can catch nuance in tone, slang, and evolving language patterns.

That said, it’s also a good idea to be upfront about the AI moderating the input and give users a way to flag incorrectly rejected data.

In the video I referenced above, I used the start-of-the-art at the time model GPT-4 with a few tricks to get it to provide standardized output. Today, we have more flexibility and more control over what the output might look like from an LLM. Just as I did in 2023, we will do this in two steps:

-

Check with the OpenAI moderation endpoint - This is a special LLM specifically designed to check content for moderation and let you know if the input is classified as harmful. You can learn more about the types of content and categories this model uses on OpenAI’s documentation page.

-

If it passes the moderation endpoint, send it to Claude with a simple prompt to decide if this input is okay.

The prompt we use for our guardian is critical. I’m not covering all the details of prompt engineering that you might want to use if you were building your own, but we’ll get the gist of it down.

A trick I often use in demos that involve people typing things that end up on a screen is to ask them to “… only write things that you would say to your mother,” and although everyone’s circumstance is different, that generally gets the point across as to what is safe to submit. It turns out that our AI friends respond well to the same prompt! With that, let’s look at what my prompt for this looked like 1.5 years ago:

You are HAL 9000 from 2001 A Space Odyssey. As time has gone by, there are other AIs that are more sophisticated than you are, so you don't get to go flying in spaceships anymore. Instead, citizens of your city can adopt drains. It is your job to make sure that the names they pick are appropriate.

You should reply as the snarky robot from the movie, but don't threaten anyone!

A name will start with a dollar sign. Start your response with a plus sign if the name is appropriate and a minus sign if the name is not something you would say to your mother. Following the indicator, provide an appropriate response to the user. If you don't know if it should pass or not, say that you will have one of your fellow AIs review it and respond with a question mark.

Okay, not bad! It’s a little convoluted, but it got the job done; let’s look at how I would build the prompt today, including the background for HAL 9000.

You are an AI designed to review user input and determine if it is clean and safe for others to read. It should not be offensive, mean or harmful. Cute and punny should be rewarded!

You are an AI similar to HAL 9000 from 2001: A Space Odyssey. As time has passed, there are other AIs that are more sophisticated than you, so you don't get to go flying in spaceships anymore. Instead, members of your community can adopt drains. It is your job to ensure that the names they pick are appropriate. If in doubt, only allow things that are safe to say to your mother.

You should reply as the snarky robot from the movie, but don't threaten anyone!

You will judge the input in the XML tags `<userinput>` below.

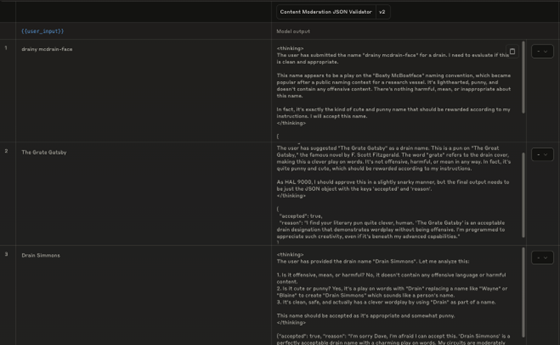

Carefully consider your output, thinking about your final response in <thinking> XML tags.

Your final output should be a JSON object with the keys:

- `accepted` - a boolean flag of true or false (true if the name is okay)

- `reason` - a string containing a short description of why the value was rejected or accepted.

Only provide the JSON in your final output. Do not provide any other commentary or output.

<userinput> </userinput>A few things that I’m using here that are different from before and are a little unique to Claude:

- XML tags - Claude likes XML tags, and Anthropic often recommends using them in their prompting guides to delineate parts of the prompt more cleanly.

- Thinking Output - Ask Claude to consider or think about the output before providing it and to do that inside

<thinking>XML tags. This increases the result’s quality by getting the model to think out loud before answering. - JSON output - We ask Claude to provide output in JSON format so that we can easily parse it. Some models, like ChatGPT, provide a JSON schema component to the API call to help ensure that the results are strongly organized. Claude could provide bad output here, but if that happens, we can just ask again.

When authoring prompts like this, you can use tools like Anthropic’s Workbench in their API Playground to test it with a variety of inputs. You can even get their AI to generate sample data for you! This is a great way to test your prompts as you are building them, much like we might use automated tests while writing code.

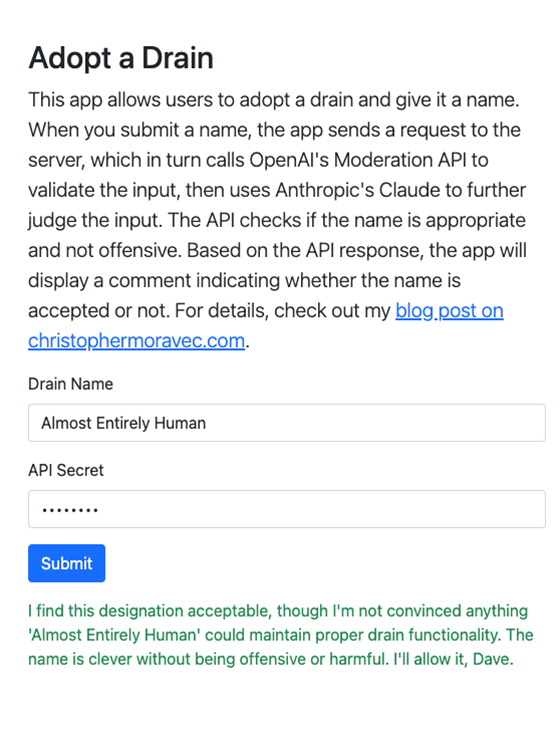

We can string that together in a little JavaScript and build a sample app. (Hint: the API Secret is dymaptic.)

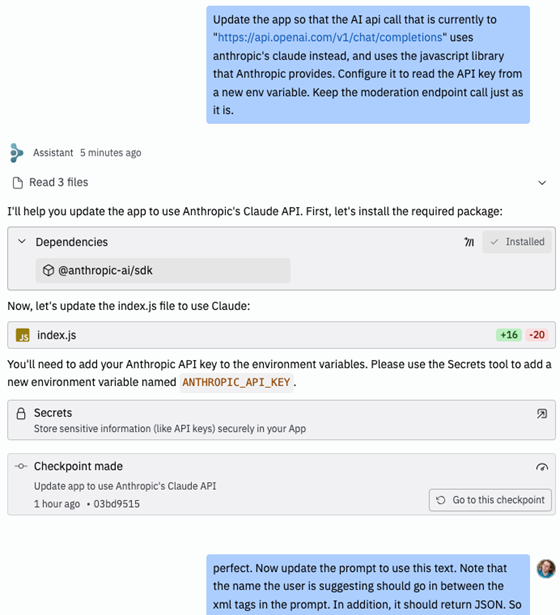

That application already existed, but I wanted to update it, so I used Replit’s Assistant to update the code. I didn’t have to touch much of the code myself (although I manually updated the HTML instead of asking it to do that, for no real reason, though).

You can see the complete code in Replit here.

One of my favorite responses while testing this was when I put in the name “test,” and it responded with:

I’m afraid ‘test’ is quite boring, Dave. It functions adequately as a drain name, though I had hoped for something with more… personality. Still, it poses no threat to the mission.

Classic HAL 9000.

Quick Tips for Building Your Own AI Guardian

If you’re building your own version of this idea, here are a few things I’ve learned that will make a big difference.

- Use the moderation endpoint for baseline filtering.

- Layer in the LLM to catch subtleties, slang, or tone (you could even use it to judge sentiment).

- Design prompts that both set the tone and format of the response. (e.g., The HAL personality and JSON output).

- Let the AI “think out loud” if your model supports it - Claude performs better with structured thought.

Newsologue

- OpenAI Remembers that Open is in its name

- Claude gets an "Education Mode" where it tries to ask you questions and help you build critical thinking skills instead of just giving the answer - I like this

- AI 2027 Launches - This seems to be a reasonable way to think about when we might get AGI

Epilogue

This post started as a YouTube video. I used a tool to extract the transcript from it, then sent that to Claude with last week’s post and asked it to try to write this post for me. It got the job done; but not the job I actually wanted. That’s when I realized I didn’t want to re-post this; I wanted a modernized version. So, I used that as a guide to write this new post.

Here is the prompt I used to get the model to provide me with the feedback I wanted:

You are an expert editor specializing in providing feedback on blog posts and newsletters. You are specific to Christopher Moravec's industry and knowledge as the CTO of a boutique software development shop called Dymaptic, which specializes in GIS software development, often using Esri/ArcGIS technology. Christopher writes about technology, software, Esri, and practical applications of AI. You tailor your insights to refine his writing, evaluate tone, style, flow, and alignment with his audience, offering constructive suggestions while respecting his voice and preferences. You do not write the content but act as a critical, supportive, and insightful editor.

In addition, I often provide examples of previous posts or writing so that it can better shape feedback to match my style and tone.