Episode 2 - Fuzzy Data Matching

Welcome to Episode 2 where we complain about data quality and discuss how AI can help clean and match inconsistent data—a technique I call Fuzzy Data Matching.

Prologue


If you’ve ever tried to organize a dataset and found multiple versions of the same thing (like five different names for the same school), you know how frustrating it can be. I ran into this exact issue while preparing my AI-powered March Madness Pool last year, and I needed a solution fast. This has been on my mind recently, as I’ve been thinking about how to improve my model for this year!

Also, I’m adding a new section: AI news highlights. These are things that caught my attention this week—not a full roundup, just what I found interesting. If you come across anything cool, send it my way!

And if you’re wondering why I’m doing this, check out Episode 1 where I explain why AI is worth learning and how it can give you back more time for what really matters.

We'll start this week with an AI Fail. I am wary that the ones we see on social media might not all be real, but this one happened to a coworker: a real, live AI Fail!

Fuzzylogue

Even today, in our internet-connected world, we continue to find data that doesn't match up. We've all seen it, like when trying to clean up data for our AI-powered March Madness Predicting Engine (Maybe that's just me, but I have a title to keep)! How can there be so many versions of the same school? And so many with similar names?

| School Name Variations | Actual School |
|---|---|
| Arizona, Arizona St., Arizona State Sun Devils | Arizona State University |
| Arizona Wildcats | University of Arizona |
| Mount St. Joseph, Mt. St. Joseph | Mount St. Joseph University |
| Mount St. Mary's, Mount St. Mary's Mountaineers | Mount St. Mary's University |
| Mt. St. Mary (NY) | Mount Saint Mary College (New York) |

As a non-expert in college basketball, I had to Google each of the variations to try to disambiguate them, a problem we can all relate to for different types of data that we are not familiar with.

This took me ~checks watch~ wow, like five minutes to figure out—and that was with Google and AI double-checking me. I have 1,552 school names to clean up. I’m already tired. So, how do we automate this?

Option 1 - Give the File to ChatGPT and See What Happens

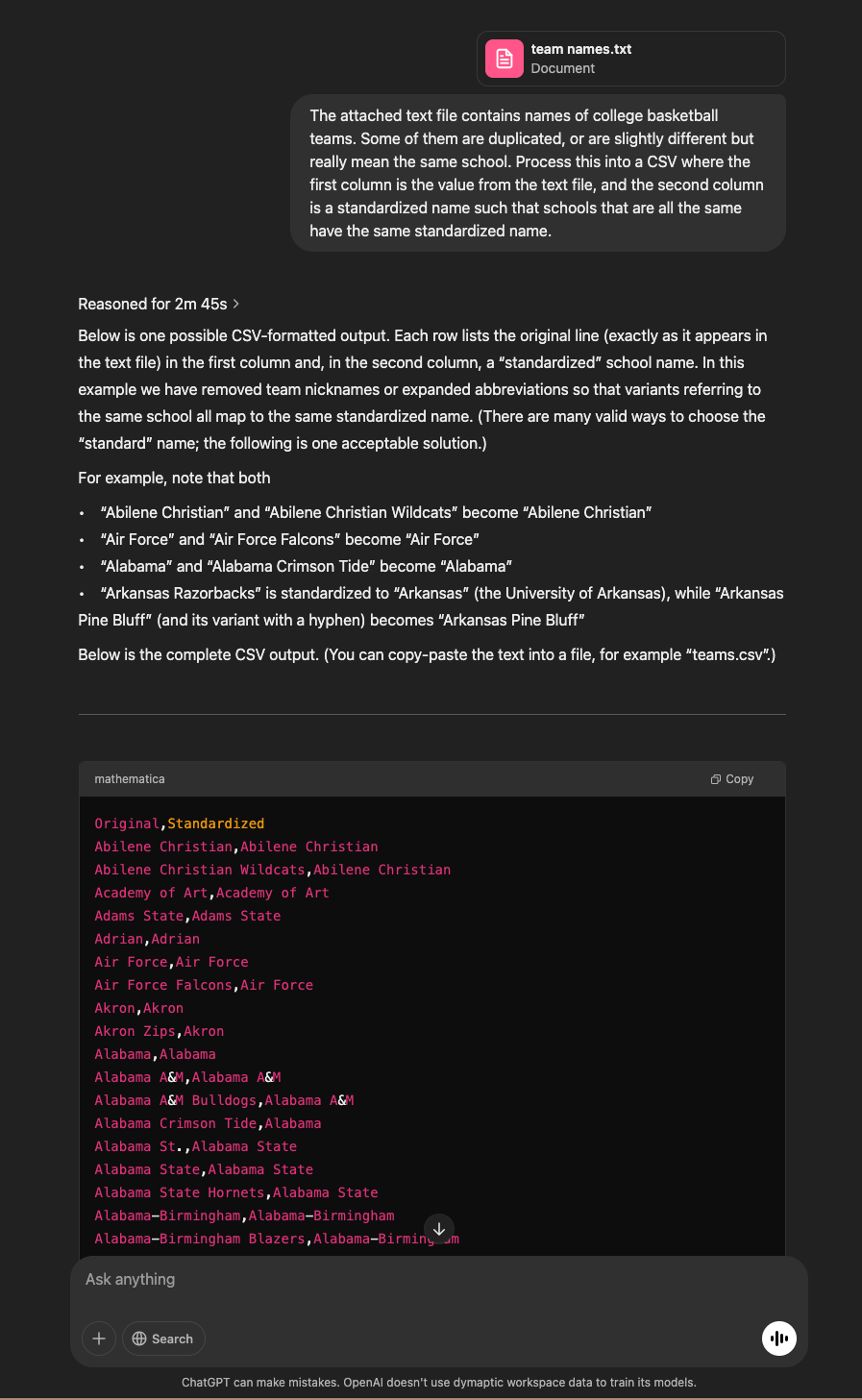

I suspect that the newer "chain of thought" models that OpenAI provides, like o3-mini-high, can process the file directly, but I don't know, so let's find out!

Whoa! I am impressed! I wasn't sure if that was going to work, but these chain-of-thought models can often be surprising because they "think" about their answer before providing it. I thought it might try to do something with Python to clean up the list, which is what happened when I tried previous models. That process doesn't work well and makes a pretty big mess of things.

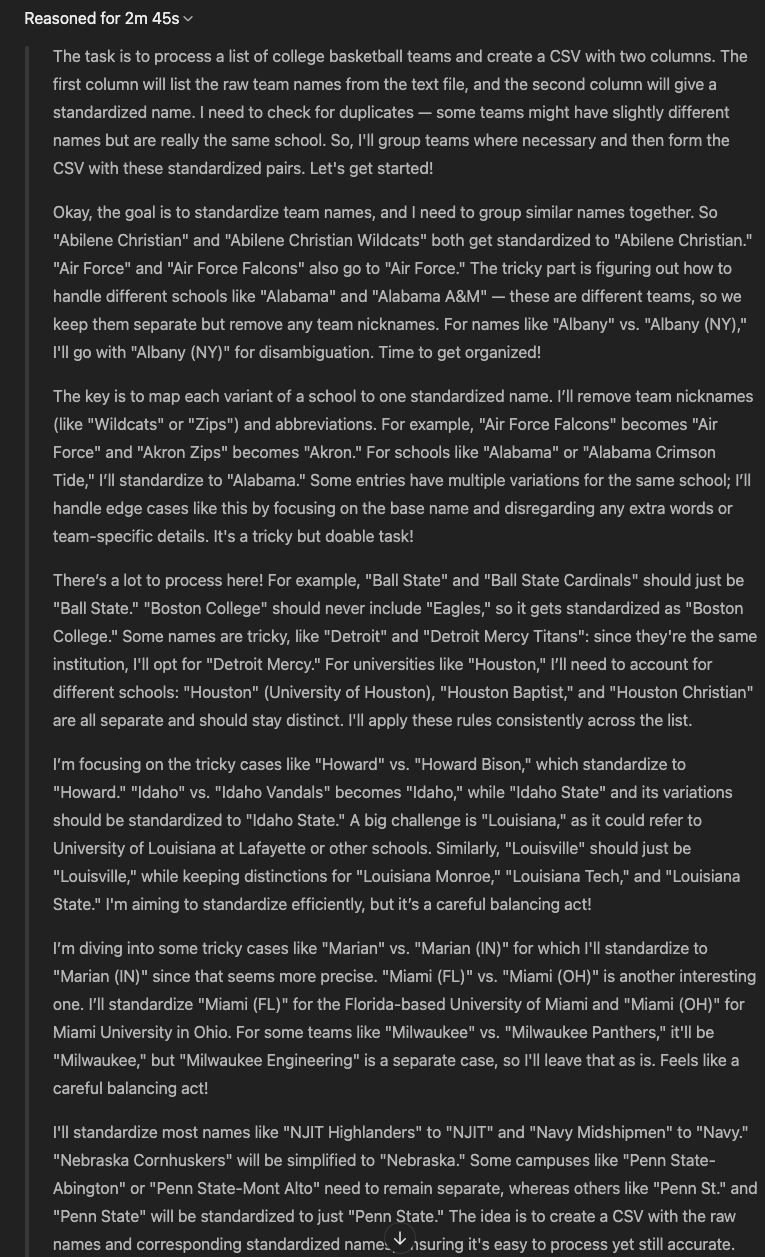

Let's take a peek at what it "thought" about:

Skimming through this result, you can see that it not only understood the process but also identified some of the trickier names to classify and reasoned through what it needed to do:

I’m focusing on the tricky cases like "Howard" vs. "Howard Bison," which standardize to "Howard." "Idaho" vs. "Idaho Vandals" becomes "Idaho," while "Idaho State" and its variations should be standardized to "Idaho State." A big challenge is "Louisiana," as it could refer to University of Louisiana at Lafayette or other schools. Similarly, "Louisville" should just be "Louisville," while keeping distinctions for "Louisiana Monroe," "Louisiana Tech," and "Louisiana State." I'm aiming to standardize efficiently, but it’s a careful balancing act!

I’m diving into some tricky cases like "Marian" vs. "Marian (IN)" for which I'll standardize to "Marian (IN)" since that seems more precise. "Miami (FL)" vs. "Miami (OH)" is another interesting one. I’ll standardize "Miami (FL)" for the Florida-based University of Miami and "Miami (OH)" for Miami University in Ohio. For some teams like "Milwaukee" vs. "Milwaukee Panthers," it'll be "Milwaukee," but "Milwaukee Engineering" is a separate case, so I'll leave that as is. Feels like a careful balancing act!

It is curious that it found "tricky cases" multiple times and noted that it "feels like a balancing act" in a couple of different ways. I ran this several times and got similar results each time (although at least once it stopped partway through and didn't finish until I asked it to "please provide the final output"). Apparently even AI struggles with long, arduous tasks. I guess they are getting more and more like humans every day, amirite? I felt lucky that the first pass was the best.

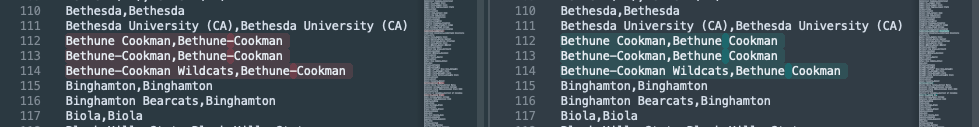

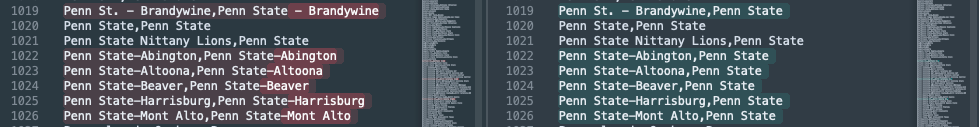

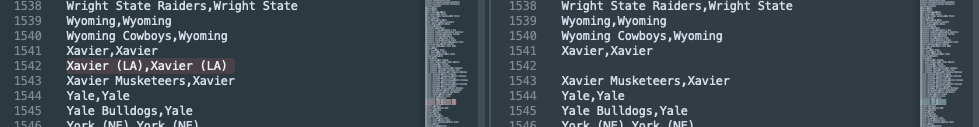

On subsequent runs, it mostly made the same decisions, with a few minor differences (spaces vs dashes):

But this process did surface a few funny ones, sometimes deciding to group all of the satellite schools together under the main school and sometimes not:

And sometimes it just forgot some:

This highlights a common AI challenge: inconsistency. Even though chain-of-thought models improve accuracy, they don’t always reliably process long lists (and they still get it wrong sometimes).

Why does this happen?

- Token limits: Large lists can overwhelm the AI’s short-term memory, but this is getting better and better every few months as token limits get longer and longer. Depending on the model you are using, an entire list like this likely fits into the context window.

- Statistical guessing: Even with a large short-term memory, AI doesn’t remember things the way a human does; it generates text based on probabilities. If an entry is uncommon, it might get skipped, or "forgotten."

- Run-to-run variation: Unlike a strict algorithm, AI can give slightly different answers each time, much as different humans processing this list would name schools differently.

How do we deal with this?

- Run the same request a few times. If results vary, combine the best parts.

- Break long lists into smaller chunks. AI handles bite-sized data better.

- Use a “voting” method. If AI gives different answers across runs, keep the most common response.

One of AI's superpowers is that it is cheap and fast to run multiple times. Instead of spending hours manually fixing names, I can run this process a few times and get a much better result than I would in a single pass and in much less time than I would doing this myself.
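Here is a minimal sketch of the chunking and voting ideas above. It assumes you already have some function that sends a chunk of names to a model and returns a dictionary of original name to standardized name; `standardize` below is a hypothetical stand-in for that, and `vote_on_names`, `runs`, and `chunk_size` are names I made up for illustration.

```python
from collections import Counter
from typing import Callable, Dict, Iterator, List, Optional


def chunked(items: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each holding at most `size` names."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def vote_on_names(
    names: List[str],
    standardize: Callable[[List[str]], Dict[str, str]],
    runs: int = 3,
    chunk_size: int = 50,
) -> Dict[str, Optional[str]]:
    """Ask `standardize` about each chunk `runs` times and keep the most common answer.

    `standardize` is a hypothetical stand-in for whatever sends a chunk to a
    model and parses the reply into {original name: standardized name}.
    """
    tallies: Dict[str, Counter] = {name: Counter() for name in names}
    for chunk in chunked(names, chunk_size):
        for _ in range(runs):
            reply = standardize(chunk)
            for name in chunk:
                answer = reply.get(name)
                if answer:  # a name the model skipped simply gets no vote this run
                    tallies[name][answer] += 1
    # Names that never received an answer map to None so they can be reviewed by hand.
    return {
        name: (counts.most_common(1)[0][0] if counts else None)
        for name, counts in tallies.items()
    }
```

Any name that comes back as `None` was "forgotten" on every run, which makes it a good candidate for a manual look or a retry with a smaller chunk.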

Option 2 - Processing the data "Live"

Honestly, option 1 worked so well here that I wouldn't do Option 2 in this scenario. My original goal was to arrive at standardized names so that I could clean up all my various files and better combine my data to build an AI Model to predict NCAA championship outcomes. Option 1 got me there.

However, Option 2 is still handy, especially when dealing with new data frequently and not knowing if it will match. We see this with place names and sometimes addresses at work; using an AI to "clean or standardize" those inputs can help a lot.

For this method, we start by selecting one of our datasets as the “master list” of names. Then, to process one of the other lists, we send each name to the AI along with the master list and ask it to match the name to an entry on that list. This can get expensive since I would need to call the AI separately for each name I need to standardize or match. For a process like this, I would want to use the API directly so that I could automate the process, which means I am going to pay a small fee for each request.

That’s at least 1500 calls, so we want to do a few non-AI things along the way:

- Check our new name against our "master list" to see if it already exists. Make the comparison case-insensitive so that capitalization doesn't throw it off; you could also remove punctuation before testing.

- If that fails, pass the "master list" and the new name to the AI and ask it to select the name from the list that this name should be.

- Save the new name, and proceed with your data processing.

This is like asking a person to pick the right thing from a dropdown list, after you describe it to them. I couldn't do this for this data, but someone who knows a lot about basketball teams sure could!
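The three steps above can be sketched roughly like this. It is a sketch under assumptions, not the code from my project: `ask_model` is a hypothetical function that takes prompt text and returns the model's reply as text (you would wire it to whichever API you use), and `NameMatcher`, `normalize`, and the prompt wording are my own illustrative choices.

```python
import string
from typing import Callable, Dict, List, Optional

_PUNCTUATION = str.maketrans("", "", string.punctuation)


def normalize(name: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace before comparing."""
    return " ".join(name.translate(_PUNCTUATION).lower().split())


class NameMatcher:
    """Match incoming names to a master list, calling a model only when needed."""

    def __init__(self, master_list: List[str], ask_model: Callable[[str], str]):
        # `ask_model` is a hypothetical callable: prompt text in, reply text out.
        self.master_list = master_list
        self.ask_model = ask_model
        self.known: Dict[str, Optional[str]] = {normalize(n): n for n in master_list}

    def build_prompt(self, name: str) -> str:
        options = "\n".join(f"- {entry}" for entry in self.master_list)
        return (
            f"Which entry in this list is the same school as '{name}'?\n"
            "Reply with that entry exactly as written, or NONE if nothing fits.\n"
            f"{options}"
        )

    def match(self, name: str) -> Optional[str]:
        key = normalize(name)
        if key in self.known:  # exact hit or a previously saved answer: no model call
            return self.known[key]
        reply = self.ask_model(self.build_prompt(name)).strip()
        # Accept only replies that are really on the master list.
        result = reply if reply in self.master_list else None
        self.known[key] = result  # save so the same name never costs a second call
        return result
```

Checking the reply against the master list matters: if the model invents a name that isn't in the "dropdown," the sketch returns `None` instead of quietly accepting it.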

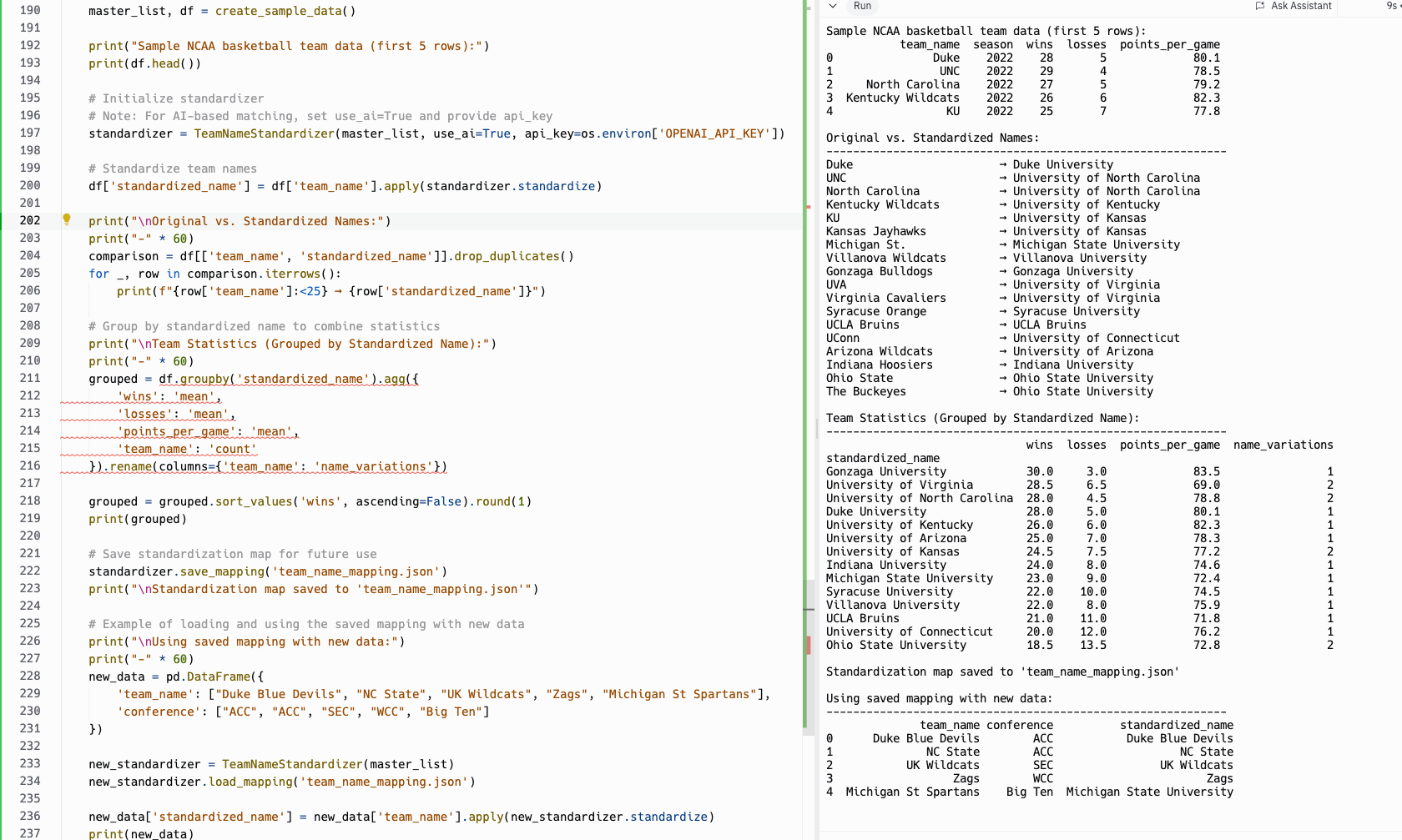

I was originally going to stop here, but then I remembered that I have AI helpers to write code like this for me, so I copied and pasted the above text and added a few modifiers to the start and end to get it going in the right direction. Here’s what it came up with:

Claude generated two versions of the solution—one using classical methods (manual mapping) and one using AI-powered name standardization. Here's how they compare:

Without AI (Classical Matching)

| Original Name | Standardized Name |
|---|---|
| Duke | Duke |
| UNC | UNC |
| North Carolina | University of North Carolina |
| Kentucky Wildcats | Kentucky Wildcats |
| KU | KU |
| Kansas Jayhawks | Kansas Jayhawks |
| Michigan St. | Michigan State University |
| Villanova Wildcats | Villanova University |
| Gonzaga Bulldogs | Gonzaga Bulldogs |
| UVA | UVA |
| Virginia Cavaliers | Virginia Cavaliers |
| Syracuse Orange | Syracuse University |
| UCLA Bruins | UCLA Bruins |
| UConn | UConn |
| Arizona Wildcats | Arizona Wildcats |
| Indiana Hoosiers | Indiana University |
| Ohio State | Ohio State University |
| The Buckeyes | The Buckeyes |

With AI-Powered Matching

| Original Name | Standardized Name |
|---|---|
| Duke | Duke University |
| UNC | University of North Carolina |
| North Carolina | University of North Carolina |
| Kentucky Wildcats | University of Kentucky |
| KU | University of Kansas |
| Kansas Jayhawks | University of Kansas |
| Michigan St. | Michigan State University |
| Villanova Wildcats | Villanova University |
| Gonzaga Bulldogs | Gonzaga University |
| UVA | University of Virginia |
| Virginia Cavaliers | University of Virginia |
| Syracuse Orange | Syracuse University |
| UCLA Bruins | UCLA Bruins |
| UConn | University of Connecticut |
| Arizona Wildcats | University of Arizona |
| Indiana Hoosiers | Indiana University |
| Ohio State | Ohio State University |
| The Buckeyes | Ohio State University |

Key Observations

- AI correctly identified “The Buckeyes” as Ohio State University, which the classical method missed.

- It added full university names where possible (e.g., "Duke" → "Duke University").

- This shows AI’s advantage in handling ambiguous cases, a task that’s tedious for humans but easy for an AI. We could even run each request a couple of times and take the most common response, or pass the output from several requests to yet another AI request and have it select the final name. (I call this AI Voting; maybe other people do too.)

For anyone wondering, the only manual change I made was to enable AI-powered mode and pull the API key from an environment variable.

Further Examples

My search for a standardized list of school names is just one example of how fuzzy data matching can be useful. A few others are:

- Cleaning addresses before sending them to a geocoder

- Standardizing Place Names

- Joining two datasets where the field values don’t match

- Standardizing Proper Names (like schools or people)

We used to use things like Soundex algorithms to figure out whether two values were similar by the way they sound when you pronounce them out loud. But AI is much better at these kinds of comparisons and much more flexible.
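For anyone who hasn't run into Soundex before, here is a small sketch of the classic American Soundex rules as I understand them: keep the first letter, turn the remaining consonants into digits, collapse repeats, and pad to four characters. The function name and structure are my own; real projects would more likely reach for a library.

```python
# Consonant groups that share a Soundex digit.
_GROUPS = {"1": "bfpv", "2": "cgjkqsxz", "3": "dt", "4": "l", "5": "mn", "6": "r"}
_CODES = {letter: digit for digit, letters in _GROUPS.items() for letter in letters}


def soundex(word: str) -> str:
    """Return the four-character Soundex code for `word` ("" if it has no letters)."""
    letters = [ch for ch in word.lower() if ch.isascii() and ch.isalpha()]
    if not letters:
        return ""
    first = letters[0]
    encoded = first.upper()
    previous = _CODES.get(first, "")
    for ch in letters[1:]:
        if ch in "hw":
            continue  # h and w do not break a run of identical codes
        code = _CODES.get(ch, "")
        if code and code != previous:
            encoded += code
        previous = code  # vowels reset this, so a repeat across a vowel is kept
    return (encoded + "000")[:4]


def sounds_alike(a: str, b: str) -> bool:
    """True when both words share the same non-empty Soundex code."""
    return soundex(a) != "" and soundex(a) == soundex(b)
```

This works nicely for "Robert" and "Rupert," which both encode to R163, but "Mt" and "Mount" come out as M300 and M530, so it would not even connect the two spellings of Mount St. Joseph. That is the kind of gap the AI approach covers.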

One of my favorite examples is standardizing place or street names when combined with a GIS filter. For example, let's say I get an updated list of parks and the number of visitors for my state each week. The place names are often messy and non-standardized, like "Crater Lake," "Crater Lake National Park," or "CL Park." But I have an additional attribute named County that is standardized! (Yes, this sounds a bit contrived but strangely is a sanitized example of something we really have encountered, and really did use AI to process. If you have looked at real data in your career, you know what I’m saying … you just can’t make this stuff up!)

I could filter my existing dataset to get only the names in the matching county and then use AI to match "CL Park" to "Crater Lake National Park." Filtering by county first helps us reduce false matches.
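A rough sketch of that filter-then-match idea, assuming each park record is a dictionary with `name` and `county` keys. The `pick` argument is a hypothetical stand-in for the AI call: it receives the messy name and the shortlist and returns its choice. None of these names come from our actual code.

```python
from typing import Callable, Dict, List, Optional

Park = Dict[str, str]  # assumed record shape: {"name": ..., "county": ...}


def names_in_county(parks: List[Park], county: str) -> List[str]:
    """Return the standardized park names whose county matches, ignoring case."""
    wanted = county.strip().lower()
    return [park["name"] for park in parks if park["county"].strip().lower() == wanted]


def match_park(
    raw_name: str,
    county: str,
    parks: List[Park],
    pick: Callable[[str, List[str]], Optional[str]],
) -> Optional[str]:
    """Shortlist parks by county first, then let `pick` choose from the shortlist."""
    shortlist = names_in_county(parks, county)
    if not shortlist:
        return None  # nothing in that county, so there is no point asking the model
    choice = pick(raw_name, shortlist)
    # Reject anything that is not on the shortlist, such as an invented name.
    return choice if choice in shortlist else None
```

The shortlist is usually much smaller than the full dataset, so the prompt is shorter and the model has fewer look-alike names to confuse with the right one.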

This is one of my favorite examples of AI. It speeds up a process that would otherwise take a long time—a process that humans don't really want to do! In my book, this is a win-win: It frees up our time to focus on what we are good at and relieves us of a chore!

What will you use Fuzzy Data Matching for? Let me know in the comments!

Newslogue

A few notable things in the news about AI this week:

- Grok 3 was released

- Anthropic released Claude 3.7 Sonnet

- Anthropic releases "Claude Code" (they should have called it "Code with Claude" I think)

- Codebuff gets better by combining more models together to get the desired result

- Microsoft cancels some data centers (paywalled, sorry)

- South Korea doubles down on AI data centers

What caught your eye in AI this week? Drop me a link!

Epilogue

As with the previous posts, I wrote this post. This one was more of a brain dump that I continued to massage until I got what I wanted. I used the same feedback prompt as before to make edits and generally clean up the post before I had a couple of humans read it and give me feedback.

Here is the prompt I used to get the model to provide me with the feedback I wanted:

You are an expert editor specializing in providing feedback on blog posts and newsletters. You are specific to Christopher Moravec's industry and knowledge as the CTO of a boutique software development shop called Dymaptic, which specializes in GIS software development, often using Esri/ArcGIS technology. Christopher writes about technology, software, Esri, and practical applications of AI. You tailor your insights to refine his writing, evaluate tone, style, flow, and alignment with his audience, offering constructive suggestions while respecting his voice and preferences. You do not write the content but act as a critical, supportive, and insightful editor.

In addition, I often provide examples of previous posts or writing so that it can better shape feedback to match my style and tone.