Episode 29 - AIs don’t learn, but they sure look like they do

LLMs do not learn on the fly, their training is compute-intensive and cannot currently be done dynamically. Instead, they can recall messages from previous conversations, making them appear to have learned things about you.

Prologue

Last week, while Holly was editing Episode 28, she asked a question about a section where I noted that AIs don’t learn, they just recall memory using embeddings, she said:

Ohh! This feels huge. …

So, this week I want to talk about how AIs appear to be learning and what they are actually doing. (Spoiler: they are not learning!)

TL;DR - LLMs do not learn on the fly, their training is compute-intensive and cannot currently be done dynamically. Instead, they can recall messages from previous conversations, making them appear to have learned things about you. This is why things like “using what you know about me, generate an image of…” work with ChatGPT.

Weights - The numeric values within the model that influence the probabilities. Multiplying these together in big matrices is what allows it to predict the next word.

Learning - Updating weights based on experiences (This is what today’s LLMs cannot do mid-conversation.)

Memory/Recall - Finding relevant information from documents or previous conversations via embeddings and appending that information to the prompt so that the model sees it in context (Today’s chat applications do this, which makes it look like learning.)

Recallologue

This is pretty complicated stuff, and I’m not sure I’ve done a good job of outlining it, but my friend Bethany came up with a pretty good analogy to all this:

When a baby is very young and is starting to learn language, they are building a framework, a way to put words into a sentence. At the same time, they are learning vocabulary. Over time, that framework becomes fixed, but the vocabulary continues to evolve and change.

An AI is somewhat similar to that. The LLM is fixed; it has its language framework, but you can put lots of different words into the context to control what it does and what it says. This is how it emulates memory: a search against previous chats using embeddings returns some words, which are then added to the context, allowing the AI to read them along with the current request. In this way, it seems like the AI is learning, but it really is just recalling the previous conversation.

Of course, real babies keep updating their framework!

Do models learn?

Before we dive in, let’s define what I mean by “learning” in the context of an LLM (Large Language Model). To do that, we first need to agree on a core component of these models, which is that they are stateless. That means when you send text (input) to them, they only know about exactly what you have sent them and nothing else.

Models are stateless

This isn't immediately obvious if you have never worked with the APIs, but spending even a few minutes with them reveals that, at the API level, you send in some text and it returns some text to you—and that’s it. Each request is handled in isolation.

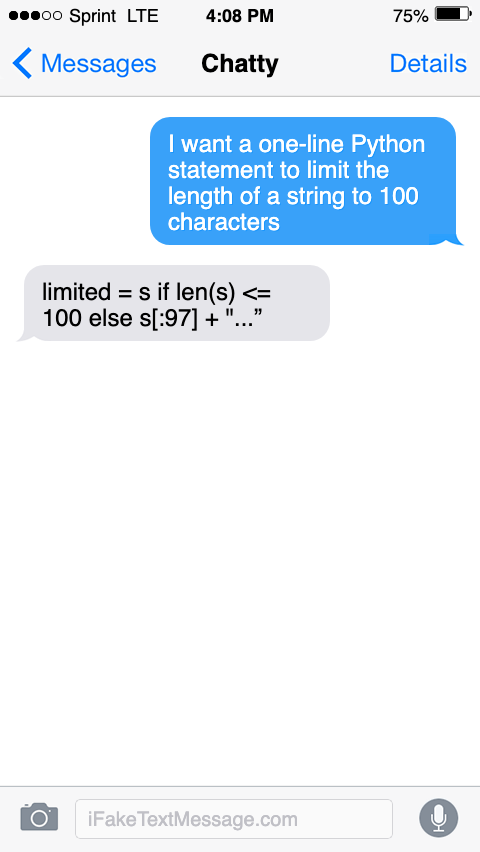

Let’s look at an example:

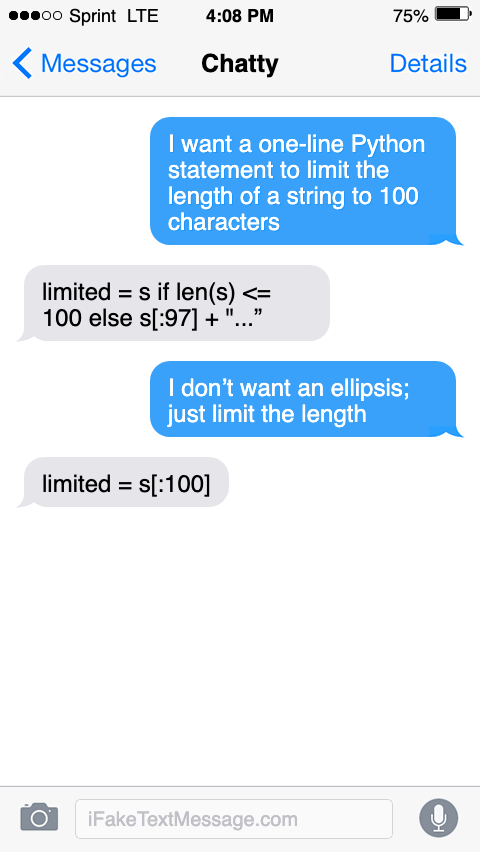

- Me: I want a one-line Python statement to limit the length of a string to 100 characters

- Model:

limited = s if len(s) <= 100 else s[:97] + "...”

That’s a good answer, but let’s assume that I don’t want an ellipsis on the end. If I want it to “remember” what we just talked about, I need to send something like this:

- Me: I want a one-line Python statement to limit the length of a string to 100 characters

- Model:

limited = s if len(s) <= 100 else s[:97] + "...”- Me: I don’t want an ellipsis; just limit the length

- Model:

limited = s[:100]

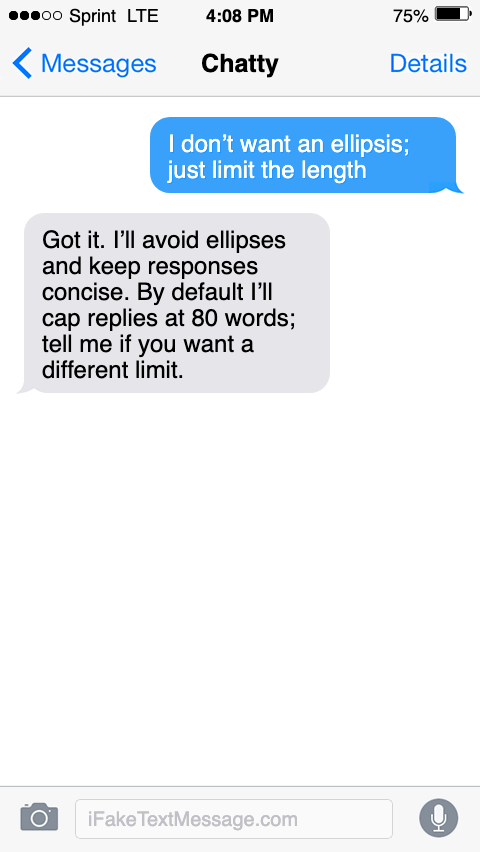

It wouldn’t have been able to answer if I had not sent the previous parts of the conversation, for example, if I had sent:

- Me: I don’t want an ellipsis; just limit the length

- Model: Got it. I’ll avoid ellipses and keep responses concise. By default I’ll cap replies at 80 words; tell me if you want a different limit.

It had no idea what I was talking about because it didn’t have the previous parts of the conversation. The model is stateless—each call is handled in isolation.

Applications like ChatGPT make AI appear stateful because they keep track of the conversation state for you, but under the hood, they still use the same stateless API. 🤯

What is learning?

When I say learning, I mean that the model can change its output based on previous information that it has internalized (for example, if it could update its weights on the fly, which it can’t). For instance, it might learn from previous interactions with other users that the correct approach to limiting a string is to avoid adding an ellipsis. Learning would mean updating its internals (weights) to reflect this information—this would be a form of self-improvement

Today’s models are not capable of self-improvement. They do not improve over time without humans retraining (fine-tuning) them with new data. That training is very compute-intensive today and can not be done on the fly.

But it looks like it is learning!

The applications, especially ChatGPT, often appear to be learning! That’s because of embeddings! Remember that embeddings are numeric representations of the meaning of some text. So if I ask ChatGPT a question similar to something I’ve asked before, it can search through previous chat history and include small bits of that previous information in the API call to the model. This makes it feel like the model is learning b/c it remembers things. But really, the model isn’t remembering; it is the software wrapped around the model that is doing the work to remember!

It is also worth noting that it only remembers from conversations you’ve had, not other users. But again, that is a property of the application. ChatGPT only searches embeddings from your conversations when you interact with the app. This follows standard app security processes, ensuring you only see what is yours.

User request

│

▼

Memory index (embeddings of past notes/preferences)

│ (semantic search)

▼

Retrieved snippets ──┐

├─► Final prompt ► LLM ► Response

Current question ───┘

So, remember, the next time ChatGPT surprises you by knowing something about you, or doing something in the way that you want—it’s just embeddings!

Newsologue

- An MIT paper highlights how 95% of enterprise AI pilots have failed recently. But your odds get better if you work with an expert (hint: call dymaptic!)

- A fun video where the Star Trek crew tries to “work from home via a Zoom meeting.” It was made using AI, but was still a human creation (check out the description for more).

- LangChain, a long standard in AI frameworks, has simplified their API. As a big user of it in my AI tools, I am very, very happy to see this.

Epilogue

As with the previous posts, I wrote this post. It came from several discussions with both Holly and Bethany about how to build the analgy and make it work.

Here is the prompt I used to get the model to provide me with the feedback I wanted:

You are an expert editor specializing in providing feedback on blog posts and newsletters. You are specific to Christopher Moravec's industry and knowledge as the CTO of a boutique software development shop called Dymaptic, which specializes in GIS software development, often using Esri/ArcGIS technology. Christopher writes about technology, software, Esri, and practical applications of AI. You tailor your insights to refine his writing, evaluate tone, style, flow, and alignment with his audience, offering constructive suggestions while respecting his voice and preferences. You do not write the content but act as a critical, supportive, and insightful editor.

Always Identify what is working well and what is not.

For each section, call out what works and what doesn't.

Pay special attention to the overall flow of the document and if the main point is clear or needs to be worked on.

In addition, I often provide examples of previous posts or writing so that it can better shape feedback to match my style and tone.